Sakana AI has introduced Transformer², a self-adaptive language model that uses a two-step mechanism to adjust its weights dynamically and adapt to new tasks at inference time, without expensive fine-tuning. This marks a significant advance in large language model (LLM) technology and is expected to change how LLMs are applied, making them more efficient, personalized, and practical. Instead of relying on traditional fine-tuning, Transformer² uses singular value decomposition (SVD) and singular value fine-tuning (SVF) to selectively adjust key components of the model's weights, optimizing performance in real time and avoiding time-consuming retraining. Test results show that Transformer² outperforms LoRA on multiple tasks while using fewer parameters, and that it also demonstrates strong knowledge transfer capabilities.

The core innovation of Transformer² is its two-step dynamic weight adjustment mechanism. First, the model analyzes the incoming user request to understand what the task requires. Second, it uses a mathematical technique, singular value decomposition (SVD), to align the model weights with those requirements. By selectively adjusting the key components of the weights, Transformer² optimizes performance in real time without time-consuming retraining. This contrasts sharply with traditional approaches, in which the parameters stay static after training or fine-tuning methods such as low-rank adaptation (LoRA) modify only a small number of parameters.
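To make the role of SVD concrete, here is a minimal NumPy sketch. It is not Sakana AI's code: the matrix `W` is random stand-in data rather than a real model weight, and the scaling factors are hand-picked for illustration. It only shows that a weight matrix can be split into singular components and that rescaling individual singular values yields an adjusted matrix of the same shape.

```python
import numpy as np

# Stand-in for one weight matrix of a model (random values, illustration only).
rng = np.random.default_rng(0)
W = rng.standard_normal((6, 4))

# SVD factorizes W into U, the singular values s, and V^T.
U, s, Vt = np.linalg.svd(W, full_matrices=False)

# Recombining the factors reproduces the original matrix.
W_rebuilt = U @ np.diag(s) @ Vt

# Hand-picked scaling (an assumption for this sketch): amplify the
# strongest component and dampen the weakest one.
scale = np.ones_like(s)
scale[0] = 1.5
scale[-1] = 0.5

# The adjusted matrix keeps the same shape and the same U and V^T;
# only the strength of each singular component changes.
W_adapted = U @ np.diag(s * scale) @ Vt
```

Only the singular values are touched here, which is why this kind of adjustment needs far fewer numbers than rewriting the matrix entry by entry.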

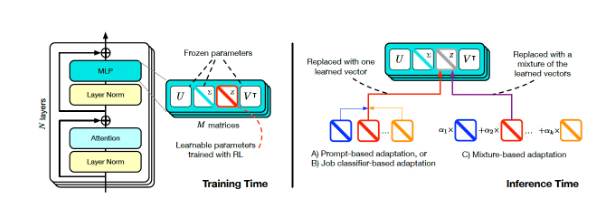

Transformer² training and inference (Source: arXiv)

To achieve this dynamic adjustment, the researchers adopted a method called singular value fine-tuning (SVF). During training, SVF learns a set of skill representations, called z-vectors, from the SVD components of the model. At inference time, Transformer² analyzes the prompt to determine which skills are required and then applies the corresponding z-vectors, producing a response tailored to each prompt.
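The two steps at inference time can be sketched as follows. This is a toy illustration under several assumptions, not the released implementation: `pick_skill` is a hypothetical keyword matcher standing in for the model's own analysis of the prompt, the z-vectors are random placeholders rather than trained values, and `W` stands in for a single weight matrix.

```python
import numpy as np

def apply_z(W, z):
    """Rescale the singular values of W by the z-vector and rebuild the matrix."""
    U, s, Vt = np.linalg.svd(W, full_matrices=False)
    # Multiplying the columns of U by (s * z) is the same as U @ diag(s * z).
    return (U * (s * z)) @ Vt

def pick_skill(prompt):
    """Hypothetical step one: guess the required skill from keywords."""
    text = prompt.lower()
    if any(k in text for k in ("solve", "equation", "integral")):
        return "math"
    if any(k in text for k in ("function", "python", "bug")):
        return "code"
    return "general"

rng = np.random.default_rng(0)
W = rng.standard_normal((8, 4))  # stand-in weight matrix

# Placeholder z-vectors, one scale per singular value of W.
# A vector of ones leaves the weights unchanged.
z_vectors = {
    "math": rng.uniform(0.5, 1.5, size=4),
    "code": rng.uniform(0.5, 1.5, size=4),
    "general": np.ones(4),
}

def adapt_for_prompt(prompt):
    """Step one: identify the skill. Step two: apply its z-vector."""
    skill = pick_skill(prompt)
    return skill, apply_z(W, z_vectors[skill])

skill, W_math = adapt_for_prompt("Solve the equation x^2 = 4")
```

In a real system the adjustment would be applied across many weight matrices and the adapted weights would then be used to generate the answer; the sketch stops at producing the adapted matrix.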

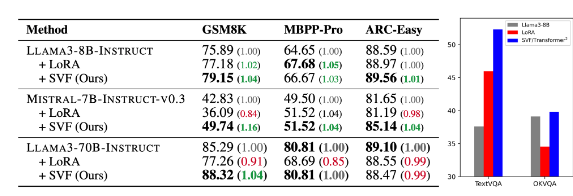

Test results show that Transformer² outperforms LoRA on a range of tasks, including mathematics, coding, reasoning, and visual question answering, while using fewer parameters. More remarkably, the approach also supports knowledge transfer: z-vectors learned from one model can be applied to another, which points to the potential for broad application.

Comparison of Transformer² (SVF in table) with the base model and LoRA (Source: arXiv)
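A rough count shows why an SVF-style update can be smaller than a LoRA update. The sketch assumes that SVF learns one scale per singular value of a weight matrix and that LoRA of rank `r` adds two low-rank factors with `r * (m + n)` entries in total. The matrix size and rank below are illustrative choices, not figures from the paper.

```python
def svf_params(m, n):
    # One learned scale per singular value of an m x n matrix (assumption).
    return min(m, n)

def lora_params(m, n, rank):
    # LoRA adds an (m x rank) factor and a (rank x n) factor.
    return rank * (m + n)

# Illustrative sizes only.
m, n, rank = 4096, 4096, 16
svf_count = svf_params(m, n)
lora_count = lora_params(m, n, rank)
ratio = lora_count // svf_count

print(svf_count, lora_count, ratio)
```

For this single matrix the LoRA update is 32 times larger, although the real gap depends on which layers are adapted and on the chosen rank.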

Sakana AI has released the training code for the components of Transformer² on its GitHub page, making it available to other researchers and developers.

As enterprises continue to explore applications of LLMs, inference-time customization is gradually becoming a mainstream trend. Transformer², along with other technologies such as Google's Titans, is changing the way LLMs are used, enabling users to adapt models dynamically to their specific needs without retraining. This advance should make LLMs useful and practical in a wider range of areas.

Researchers at Sakana AI said that Transformer² represents a bridge between static artificial intelligence and living intelligence, laying the foundation for efficient, personalized, and fully integrated AI tools.

The open-source release of Transformer² is expected to advance the development and application of LLM technology and offers a new direction for building more efficient and personalized AI systems. Its ability to learn dynamically and adapt to new tasks gives it considerable potential across many fields, and its future development is worth watching.