Google DeepMind's experimental Gemini model (Exp 1114) has posted remarkable results on the Chatbot Arena platform. Over more than a week of community testing, backed by more than 6,000 votes, it overtook many competitors and demonstrated strong all-round performance. The results show that Gemini-Exp-1114 is tied for first place overall with GPT-4o-latest. It also leads in several key categories, including mathematics, complex prompts, and creative writing, which shows its broad multi-domain capabilities. This marks a significant gain in Google's competitiveness in the large AI model field.

Google DeepMind's latest experimental version of Gemini (Exp 1114) has achieved remarkable results on the Chatbot Arena platform. After more than a week of community testing, more than 6,000 accumulated votes show that the new model outperforms its competitors by a clear margin and performs strongly in several key areas.

In the overall rankings, Gemini-Exp-1114 jumped by more than 40 points to tie for first place with GPT-4o-latest, surpassing o1-preview. Notably, the model also reached the top spot in core categories such as mathematics, complex prompts, and creative writing, which demonstrates very strong all-round capability.

Specifically, Gemini-Exp-1114's gains across categories are impressive:

- Overall ranking: rose from 3rd to 1st
- Mathematics: rose from 3rd to 1st
- Complex prompts: climbed from 4th to 1st
- Creative writing: improved from 2nd to 1st
- Vision: also ranked 1st
- Coding: improved from 5th to 3rd

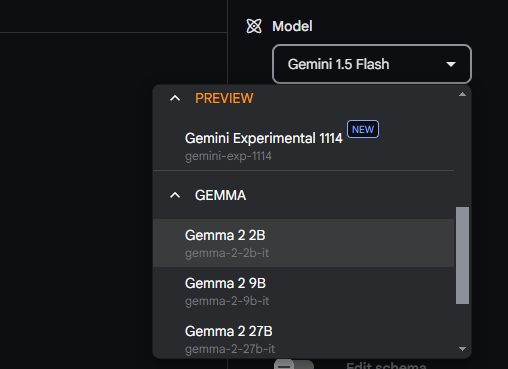

Google AI Studio has officially made the new version available for users to try. However, the community has also raised concerns about specific issues. These include whether the 1,000-token limit still applies and how the model handles practical use cases such as generating very long text.

Industry analysts believe this breakthrough shows that Google's long-term investment in AI is starting to pay off. Interestingly, the model remains in 4th place on the style-controlled ranking. This may suggest that the development team adopted a new post-training approach rather than modifying the pre-trained model.

The breakthrough has also sparked discussion about the competitive landscape. In the past, OpenAI often launched new products whenever competitors released major updates, but this time it is Google's progress that has drawn the industry's attention. Some believe this may herald the arrival of Gemini 2 and that Google's competitiveness in large models is improving significantly.

Gemini-Exp-1114's outstanding performance not only demonstrates Google's strength in AI but also offers new ideas for the future direction of large-model technology. Its further development is worth watching.