The SD3 Medium model will be open-sourced soon! This 2-billion-parameter model is scheduled for official release on June 12. It brings significant improvements in image generation, including more realistic textures, better composition, better performance, and stronger fine-tuning capabilities. ComfyUI has completed its adaptation work in advance and is ready for the new model. This means users will be able to produce high-quality, detailed images more easily, even for complex scenes.

News from ChinaZ.com on June 11: The SD3 Medium model will be officially open-sourced on June 12. From then on, everyone will be able to experience more realistic textures, better composition, better performance, and stronger fine-tuning capabilities when creating images.

To prepare for this important moment, ComfyUI has carried out its adaptation work in advance and is ready to support the new model. Stable Diffusion 3 Medium is a 2-billion-parameter model that is significantly more capable than previous versions in both complexity and performance, allowing it to handle more demanding image generation tasks and give users higher-quality results.

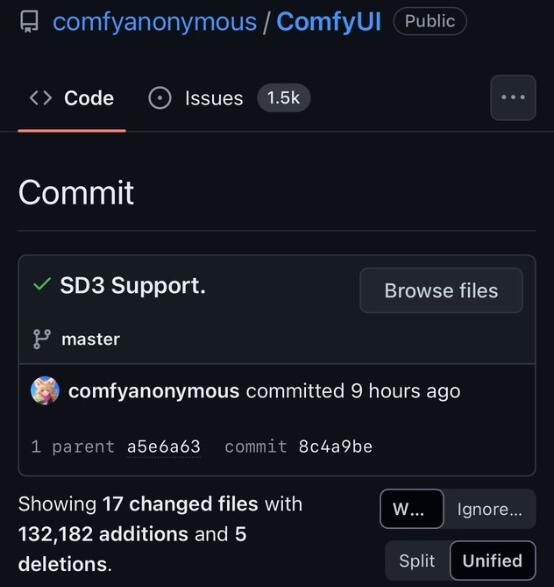

Details: https://github.com/comfyanonymous/ComfyUI/commit/8c4a9befa7261b6fc78407ace90a57d21bfe631e

It is particularly worth noting that SD3 Medium performs well at text-to-image generation, especially in areas where previous-generation models struggled. Users can expect to quickly generate detailed, highly realistic images from simple text descriptions.

Another highlight of SD3 Medium is its ability to produce high-quality, detailed images. Whether in its handling of color, light and shadow, or fine detail, the model delivers satisfying results and accurately interprets and renders the user's text description.

The open-sourcing of SD3 Medium will bring new possibilities to the field of image generation. Let's wait and see!