Tencent's newly open-sourced video control technology, MOFA-Video, has drawn wide attention in the industry for its capable feature set and ease of use. It moves past the limits of traditional video animation workflows and opens new room for creative expression. More than a technical advance, it changes how video is created, with likely impact on film production, game development, and virtual reality.

Tencent recently open-sourced MOFA-Video, a video control technology that changes how video animation can be produced. It is more than a single tool: it may mark the start of a shift in creative workflows.

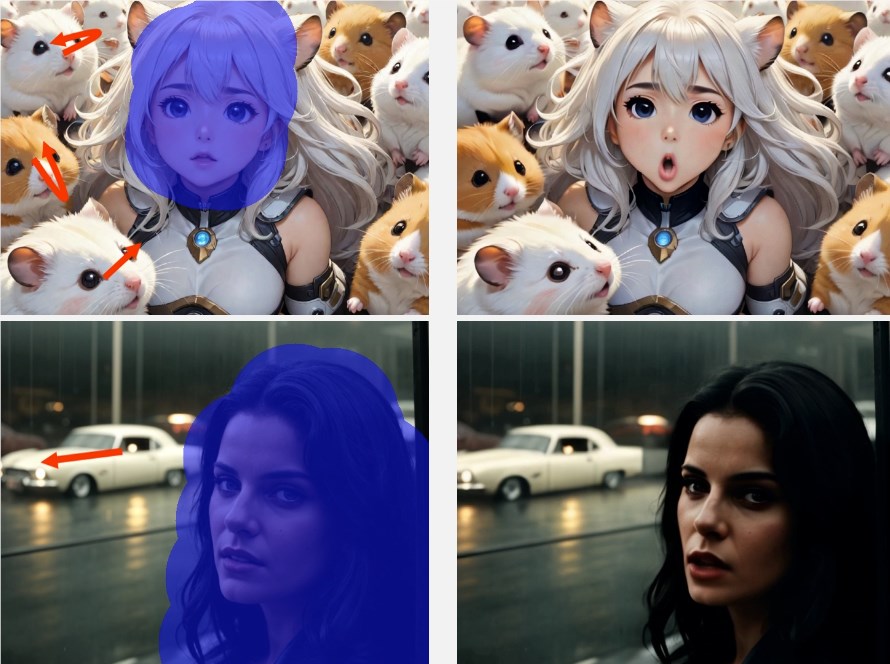

The appeal of MOFA-Video lies in its versatility. Imagine drawing arrows to steer the direction of motion in a video, as freely as painting with a motion brush. MOFA-Video makes this possible. It can also transfer facial expressions from one video onto a newly generated face video, producing new characters and emotional expressions.

Multi-domain motion field adapters are the key to these controls. They enable precise control of motion during video generation, from subtle expression changes to complex action scenes.

MOFA-Video takes the step from static to dynamic: it turns still images into lifelike videos using motion field adapters (MOFA-Adapters) together with a video diffusion model. The adapters take sparse motion cues as input and produce dense motion fields, a process known as sparse-to-dense motion generation.
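To make the sparse-to-dense idea concrete, here is a minimal sketch in plain Python. It is not MOFA-Video's method: the real adapters are learned networks, while this example substitutes simple inverse-distance weighting. The function name `densify_motion`, the `(x, y, dx, dy)` hint format, and the `power` parameter are all assumptions made for illustration.

```python
import math


def densify_motion(hints, height, width, power=2.0):
    """Spread sparse motion hints over every pixel of a height x width grid.

    hints: list of (x, y, dx, dy) tuples, i.e. "the pixel at (x, y) should
    move by (dx, dy)". This hint format is hypothetical.
    Returns field[y][x] = (dx, dy), using inverse-distance weighting as a
    hand-written stand-in for a learned sparse-to-dense adapter.
    """
    field = [[(0.0, 0.0)] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            weight_sum = dx_acc = dy_acc = 0.0
            exact = None
            for hx, hy, dx, dy in hints:
                dist = math.hypot(x - hx, y - hy)
                if dist == 0.0:
                    # A pixel that carries a hint keeps that hint unchanged.
                    exact = (float(dx), float(dy))
                    break
                weight = 1.0 / dist ** power
                weight_sum += weight
                dx_acc += weight * dx
                dy_acc += weight * dy
            if exact is not None:
                field[y][x] = exact
            elif weight_sum > 0.0:
                field[y][x] = (dx_acc / weight_sum, dy_acc / weight_sum)
    return field


# Two "arrows" on a 4x4 image: one pointing right, one pointing down.
hints = [(0, 0, 1.0, 0.0), (3, 3, 0.0, 1.0)]
field = densify_motion(hints, height=4, width=4)
print(field[3][0])  # motion at x=0, y=3, equidistant from both hints
```

Each pixel ends up with a motion vector blended from nearby hints, so a pixel equidistant from both arrows receives their average. A learned adapter would instead infer plausible motion from image content, which fixed interpolation cannot do.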

Multi-scale feature fusion lets MOFA-Video extract and combine features more efficiently, which keeps the animation smooth, natural, and highly consistent. The underlying Stable Video Diffusion model gives MOFA-Video its ability to generate natural-looking motion.

Support for diverse control signals lets MOFA-Video handle a range of complex animation scenarios, whether the input is a hand-drawn trajectory, a human landmark sequence, or audio driving a facial animation.

Zero-shot capability is another highlight of MOFA-Video. Once the adapters are trained, they can be applied to new control signals immediately, without retraining for a specific task.

Its emphasis on real-time performance and efficiency suits MOFA-Video to situations where animation must be generated quickly, such as real-time game animation and virtual reality interaction.

MOFA-Video has broad application potential in film production, game development, virtual reality, augmented reality, and other fields. It not only improves development efficiency but also gives creators more room for expression.

As MOFA-Video continues to mature, there is reason to believe it will help open a new chapter for animation and the creative industries. We look forward to what it makes possible.

Project page: https://top.aibase.com/tool/mofa-video

The open-sourcing of MOFA-Video marks a new milestone in video animation technology. Its capable feature set and ease of use could bring significant change to the creative industry, and its future development and applications are worth watching.