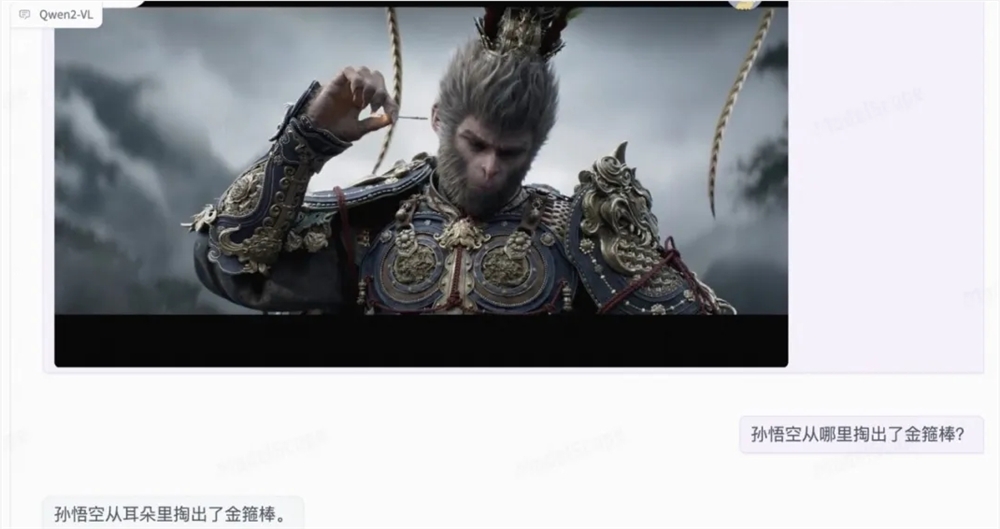

Alibaba DAMO Academy released a major update to its multimodal large language model, Qwen2-VL, on August 30, 2024. The update brings significant advances in image understanding, video processing, and multilingual support, and sets new results on key benchmarks. Beyond understanding visual information better, Qwen2-VL adds advanced video understanding and integrated visual agent capabilities, which let it carry out more complex reasoning and decision-making. Expanded multilingual support also makes it easier to use worldwide.

New features in Qwen2-VL include:

- **Enhanced image understanding:** more accurate understanding and interpretation of visual information.
- **Advanced video understanding:** the model can analyze dynamic video content in real time.
- **Integrated visual agent capabilities:** the model can act as a capable agent for complex reasoning and decision-making.
- **Expanded multilingual support:** the model is more accessible and effective across different language environments.

On the architecture side, Qwen2-VL supports dynamic resolution: it can process images of any resolution by mapping each one to a variable number of visual tokens, rather than first cutting it into fixed-size tiles. This keeps the model input consistent with the information inherent in the image. In addition, its Multimodal Rotary Position Embedding (M-RoPE) decomposes positional information so that the model can simultaneously capture and integrate 1D text, 2D image, and 3D video positions.
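To make the two architectural ideas above more concrete, here is a simplified Python sketch, not the official implementation. The first part estimates how many visual tokens an image becomes under dynamic resolution. It assumes 14-pixel patches merged 2×2 (one token per 28×28 pixel area) and pixel bounds modeled on the resizing rule in Qwen's open-source preprocessing; these constants and all function names are illustrative assumptions. The second part builds M-RoPE-style (temporal, height, width) position IDs: text tokens use the same index for all three components, while image and video tokens take their frame, row, and column. It omits details of the real model, such as how video frames are grouped in time.

```python
import math
from typing import List, Tuple

# --- Dynamic resolution (assumed constants, for illustration only) ---
PATCH = 14              # assumed ViT patch size in pixels
MERGE = 2               # assumed 2x2 merge of neighbouring patches into one token
FACTOR = PATCH * MERGE  # so each visual token covers a 28x28 pixel area
MIN_PIXELS = 4 * FACTOR * FACTOR
MAX_PIXELS = 16384 * FACTOR * FACTOR


def visual_token_count(height: int, width: int) -> int:
    """Estimate visual tokens for an image of any resolution (no fixed-size tiling)."""
    # Snap each side to the nearest multiple of FACTOR, keeping at least one token per side.
    h = max(FACTOR, round(height / FACTOR) * FACTOR)
    w = max(FACTOR, round(width / FACTOR) * FACTOR)
    if h * w > MAX_PIXELS:
        # Too large: shrink both sides by the same ratio, rounding down.
        scale = math.sqrt(height * width / MAX_PIXELS)
        h = max(FACTOR, math.floor(height / scale / FACTOR) * FACTOR)
        w = max(FACTOR, math.floor(width / scale / FACTOR) * FACTOR)
    elif h * w < MIN_PIXELS:
        # Too small: enlarge both sides by the same ratio, rounding up.
        scale = math.sqrt(MIN_PIXELS / (height * width))
        h = math.ceil(height * scale / FACTOR) * FACTOR
        w = math.ceil(width * scale / FACTOR) * FACTOR
    return (h // FACTOR) * (w // FACTOR)


# --- M-RoPE-style position IDs (simplified) ---
Pos = Tuple[int, int, int]  # (temporal, height, width)


def text_positions(n_tokens: int, start: int) -> List[Pos]:
    # Text: all three components share one 1D index, i.e. ordinary 1D RoPE.
    return [(start + i,) * 3 for i in range(n_tokens)]


def visual_positions(frames: int, rows: int, cols: int, start: int) -> List[Pos]:
    # Image (frames == 1) or video (frames > 1): temporal id follows the frame,
    # height and width ids follow the token's row and column in the grid.
    return [(start + t, start + r, start + c)
            for t in range(frames) for r in range(rows) for c in range(cols)]


def next_start(positions: List[Pos]) -> int:
    # The next segment continues one past the largest id used so far.
    return max(max(p) for p in positions) + 1


if __name__ == "__main__":
    print(visual_token_count(224, 224))   # 8 x 8 grid -> 64
    print(visual_token_count(1000, 500))  # 36 x 18 grid -> 648
    prompt = text_positions(3, 0)         # [(0, 0, 0), (1, 1, 1), (2, 2, 2)]
    image = visual_positions(1, 2, 2, next_start(prompt))
    print(image)                          # [(3, 3, 3), (3, 3, 4), (3, 4, 3), (3, 4, 4)]
    print(text_positions(2, next_start(image)))  # [(5, 5, 5), (6, 6, 6)]
```

In this sketch, a wider or taller image simply yields more tokens instead of being split into tiles, and text following an image resumes its positions just past the largest ID the image used.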

The Qwen2-VL-7B model retains support for single-image, multi-image, and video inputs at the 7B scale, and performs well on document understanding and on multilingual text understanding in images.

The team also launched a 2B model optimized for mobile deployment. Despite having only about 2 billion parameters, it performs well in image, video, and multilingual understanding.

Model links:

Qwen2-VL-2B-Instruct: https://www.modelscope.cn/models/qwen/Qwen2-VL-2B-Instruct

Qwen2-VL-7B-Instruct: https://www.modelscope.cn/models/qwen/Qwen2-VL-7B-Instruct

The Qwen2-VL update is a notable step forward for multimodal large language models. Its image, video, and multilingual capabilities open up a broad range of future applications, and the two model sizes, 7B and 2B, give developers more flexibility to match different deployment scenarios.