The rapid development of generative AI, especially the GPT series of models, has opened up new possibilities in many fields. From sharply higher bar exam scores to commercial applications, GPT-4 has shown what it can do. Some industry experts, however, question how well current generative AI understands the world and how reliable it is. They argue that its reasoning and its understanding of the world are still insufficient, and that major challenges lie ahead. In this article, the Downcodes editor takes a closer look at where generative AI is headed and at the opportunities and challenges facing China's domestic generative AI industry.

Where is generative AI headed?

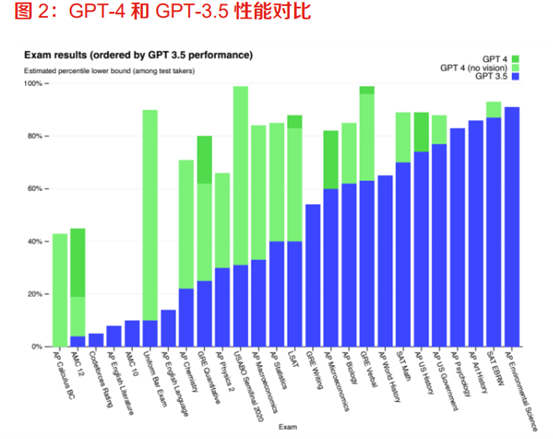

GPT-4 undeniably improves on many capabilities. Compared with GPT-3.5, it performs much better in complex professional domains, and its logical reasoning is stronger. On the U.S. bar exam, GPT-4 scores around the top 10% of test takers, whereas GPT-3.5 scores around the bottom 10%.

Image source: OpenAI

These gains also open up more use cases for ChatGPT. OpenAI currently highlights several official application scenarios: Duolingo uses GPT-4 to hold everyday conversations with learners to speed up language learning, and Morgan Stanley uses GPT-4 to organize its knowledge base so that employees can quickly find the information they need.

Still, several prominent researchers doubt GPT's current capabilities. In his talk, Stuart Russell argued that ChatGPT and GPT-4 do not understand the world and are not really "answering" questions. Today's large language models are only one piece of the puzzle; what is still missing, and what the finished picture will look like, remain uncertain. These shortcomings mean that artificial general intelligence is still a long way off. Given his doubts about GPT-4, Russell was even revising his slides during Sam Altman's talk.

Image source: BAAI Conference

Yann LeCun, Turing Award winner, one of the "Big Three" of deep learning, and Meta's chief AI scientist, shares Russell's view. He argues that GPT's autoregressive design lacks planning, so it cannot yet truly reason. Because such a large language model generates text probabilistically, errors are essentially impossible to eliminate, and as the generated text grows longer, the chance that it stays error-free drops exponentially.

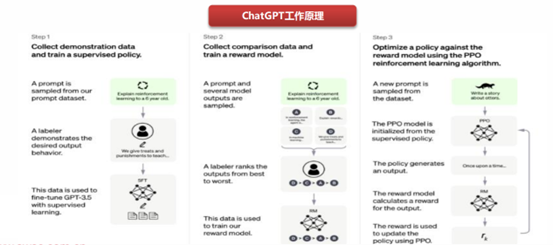
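LeCun's compounding-error argument can be made concrete with a toy calculation. The sketch below assumes, purely for illustration, that every generated token has the same independent probability e of being wrong; under that simplification the chance that an n-token answer contains no error is (1 - e)^n, which shrinks exponentially with length. The function name `prob_error_free` and the 1% error rate are hypothetical, not figures from LeCun's talk.

```python
def prob_error_free(per_token_error: float, n_tokens: int) -> float:
    """Chance that n generated tokens are all correct.

    Simplifying assumption (hypothetical): every token has the same,
    independent probability `per_token_error` of being wrong.
    """
    if not 0.0 <= per_token_error <= 1.0:
        raise ValueError("per_token_error must be between 0 and 1")
    if n_tokens < 0:
        raise ValueError("n_tokens must be non-negative")
    return (1.0 - per_token_error) ** n_tokens


if __name__ == "__main__":
    # Hypothetical 1% per-token error rate: longer answers are far less
    # likely to remain completely error-free.
    for n in (10, 100, 1000):
        print(f"{n:>5} tokens: {prob_error_free(0.01, n):.4f}")
```

With a 1% per-token error rate, a 100-token answer stays error-free only about 37% of the time. Real models do not make independent, uniform errors, so this only illustrates the shape of the argument; it is not a measurement.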

In fact, these two researchers' criticisms of GPT are not unreasonable. ChatGPT is trained with RLHF (reinforcement learning from human feedback), which relies on human judgment: human rankings of answers are used to train a model that scores answer quality, and ChatGPT is then trained to give progressively higher-quality answers. Improving the model's reasoning this way requires substantially more data and parameters, along with continuous iteration of the algorithm.

Source: Southwest Securities
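The human-feedback step in RLHF is commonly implemented by training a reward model on pairs of answers that human labelers have ranked. The sketch below shows a pairwise (Bradley-Terry style) loss of the kind described in OpenAI's InstructGPT work. It is a simplified illustration, not ChatGPT's actual training code; the function names and scores are hypothetical.

```python
import math


def preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Pairwise loss -log(sigmoid(r_chosen - r_rejected)) for a reward model.

    Small when the reward model scores the human-preferred answer higher,
    large when it prefers the answer humans rejected.
    """
    margin = reward_chosen - reward_rejected
    # Numerically stable form of log(1 + exp(-margin)).
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


def mean_preference_loss(pairs: list[tuple[float, float]]) -> float:
    """Average loss over (chosen_score, rejected_score) pairs ranked by humans."""
    if not pairs:
        raise ValueError("need at least one ranked pair")
    return sum(preference_loss(c, r) for c, r in pairs) / len(pairs)


if __name__ == "__main__":
    # Hypothetical reward-model scores for three human-ranked answer pairs.
    scores = [(2.0, 0.5), (1.0, 1.2), (0.3, -1.0)]
    print(f"mean loss: {mean_preference_loss(scores):.4f}")
```

Minimizing this loss pushes the reward model to agree with human rankings; a separate reinforcement-learning step (PPO, in InstructGPT) then tunes the language model toward answers the reward model scores highly.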

At the same time, the risks involved make many generative AI companies wary of moving quickly. If generative AI could reason through a story and give characters emotional depth the way a novelist does, could it slip entirely out of human control? And if that triggered global alarm, could strict regulation by governments render earlier investments in generative AI worthless?

As for where generative AI should go next, LeCun's answer is the world model. Such a model would not only imitate the human brain at the neural level but also mirror the brain's division into cognitive modules. Its biggest difference from a large language model is that it would be able to predict and plan (the world model) and to estimate costs (the cost module).

A world model would let an AI system better understand the world and predict and plan for the future. Through the cost module, combined with a simple objective (plan the future along whatever path requires the least action cost), it could rule out potentially toxic or unnecessary outcomes and make the system more reliable.

Image source: BAAI Conference
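The planning idea can be illustrated with a deliberately tiny example. The sketch below assumes a hypothetical one-dimensional world: a `world_model` function predicts the next state from an action, a `cost` function plays the role of the cost module, and `plan` searches every short action sequence for the one with the lowest total predicted cost. LeCun's proposal envisions learned models and far more efficient optimization; exhaustive search and these hand-written functions are stand-ins chosen for readability.

```python
from itertools import product

# Hypothetical toy world: an agent on a number line trying to reach a goal.
ACTIONS = (-1, 0, 1)


def world_model(state: int, action: int) -> int:
    """Toy predictor: the state that results from taking `action`."""
    return state + action


def cost(state: int, action: int, goal: int) -> float:
    """Toy cost module: distance from the goal plus a small price per move."""
    return abs(goal - state) + 0.1 * abs(action)


def plan(start: int, goal: int, horizon: int) -> tuple[list[int], float]:
    """Return the action sequence with the lowest total predicted cost."""
    best_actions: list[int] = []
    best_cost = float("inf")
    for actions in product(ACTIONS, repeat=horizon):
        state, total = start, 0.0
        for a in actions:
            state = world_model(state, a)  # imagine the outcome
            total += cost(state, a, goal)  # score it with the cost module
        if total < best_cost:
            best_actions, best_cost = list(actions), total
    return best_actions, best_cost


if __name__ == "__main__":
    print(plan(start=0, goal=2, horizon=3))
```

Starting at 0 with a goal of 2 and a three-step horizon, the planner picks `[1, 1, 0]`: it moves twice and then stops, because any extra move only adds cost.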

The open questions, however, are the parameters, algorithms, and cost of training such a world model. LeCun offered only strategic ideas, such as training with self-supervised learning and building multi-level models of thinking, but he could not give a complete plan for implementing them.

The other participants did not share their views on where generative AI should go next. For now, generative AI companies will likely keep "doing their own thing," and a globally unified approach to generative AI may remain at the laboratory stage.

03. Outlook for China's domestic generative AI

Professor Huang Tiejun, president of the Beijing Academy of Artificial Intelligence (BAAI, also known as the Zhiyuan Research Institute), told the media after the conference that the problem with China's domestic generative AI models is that the industry is overheated while training data is too scarce, and models with tens of billions of parameters are only now emerging. Some of them show real technical capability, but duplicated efforts are spreading the industry's resources thin, and their intelligence still lags behind that of leading foreign generative AI models.

Alibaba's Tongyi Qianwen model illustrates Huang's point. Its training data consists largely of Chinese conversations drawn from Alibaba businesses such as Taobao, Alipay, and Tmall, plus text from other sources; the pre-training corpus totals about 200 billion words, equivalent to 14 TB of text.

ChatGPT's training data is about 4.5 billion words, equivalent to 300 GB of text. Because its training data is relatively limited, Tongyi Qianwen also lacks multimodal capabilities, and even in text, both models trail GPT-4 by a wide margin.

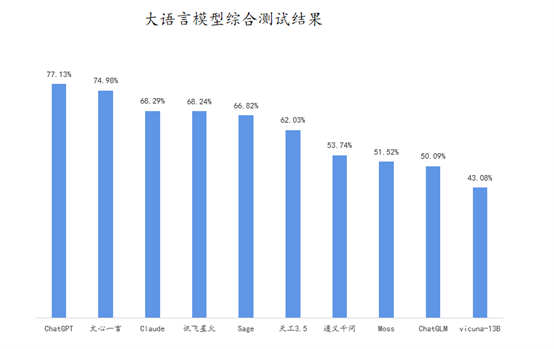

According to the "Large Language Model Comprehensive Capability Evaluation Report 2023" released by the InfoQ Research Center, ChatGPT currently leads domestic Chinese large models with an overall score of 77.13%.

Source: "Large Language Model Comprehensive Capability Evaluation Report 2023"

Huang also noted that today's large models are an intermediate product of technological iteration. As China's domestic large-model industry matures, he expects only about three large-model ecosystems to survive.

Others in the industry point in a similar direction. At a senior internal meeting at Tencent, Ma Huateng said that the dividends of the consumer (ToC) market would disappear over the next ten years, that all hope lies in the ToB market, and that the second half of the Internet belongs to the industrial Internet. Alibaba's research institute has likewise said that the next ten years will be a golden opportunity for traditional enterprises to transform.

From the ToB perspective, however, and taking the long-developing SaaS market as a reference, a large model that wants to truly open up the ToB market must, at its core, deliver "cost reduction and efficiency gains" to customers. This matters all the more in today's uncertain climate, with some industries still watching large models from the sidelines. Traditional manufacturing is a prime example: small and medium-sized manufacturers commonly face shrinking orders, prolonged price wars, and longer downstream payment cycles, and many are struggling to stay afloat. To avoid costly trial and error, they are understandably reluctant to experiment with large models.

The history of the SaaS industry tells a similar story. After about ten years of slow development beginning in early 2004, China's domestic SaaS industry reached a growth peak in 2015. The epidemic that broke out in 2020 then accelerated enterprises' digital transformation and pushed the domestic SaaS market into a critical growth period. Even so, the domestic SaaS ecosystem remains incomplete and the market immature.

Image source: Flash Cloud

Clearly, opening up the ToB market for large models will not happen overnight; it is an extremely slow process. Meanwhile, the algorithm, compute, and data costs of model iteration, along with the cost of launching new features, require large-model companies to keep investing heavily.

With a long road to commercialization, heavy capital requirements, and little prospect of short-term profit, companies short on cash flow may be forced to shut down their large models under financial pressure, and industry resources will concentrate even further among the leading large-model makers.

The experience of industries such as ride-hailing and food delivery suggests that after years of upheaval in an emerging industry, only about three companies truly thrive, while many others fade into history.

All in all, generative AI is developing rapidly, with both opportunities and challenges. World models may become an important direction, but implementing them still faces many technical and commercial problems. China's domestic generative AI industry also needs to solve problems such as data scale and commercialization to stand out in a fiercely competitive field. The Downcodes editor will keep following developments in this area and bring readers more in-depth coverage.