A new MIT study reports striking similarities between the internal structure of large language models (LLMs) and the human brain, sparking heated debate in the field of artificial intelligence. In this article, the editor of Downcodes walks through the study's main findings and what they may mean for the future development of AI. By analyzing the LLM activation space in depth, the researchers identified structural features at three levels. These findings should help us better understand how LLMs work and suggest new directions for future AI technology.

Has AI actually started to "grow a brain"? The latest research from MIT suggests that the internal structure of a large language model (LLM) resembles the human brain in surprising ways.

The study used sparse autoencoders to analyze the activation space of LLMs in depth and found striking structure at three levels:

First, at the microscopic level, the researchers found "crystal"-like structures. The faces of these "crystals" are parallelograms or trapezoids, much like the familiar word analogy "man:woman::king:queen."

Even more surprising, these "crystal" structures become much clearer once irrelevant distractor factors (such as word length) are removed using linear discriminant analysis (LDA).
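The effect of removing a distractor can be sketched with a simplified stand-in. The code below does not run LDA. It assumes the distractor direction is already known and simply projects it out, which is the easiest case to follow. The 3-D points are invented: the first two coordinates carry the analogy, and the third stores each word's length in letters.

```python
import numpy as np

def remove_direction(points, direction):
    """Project every point onto the subspace orthogonal to `direction`."""
    unit = direction / np.linalg.norm(direction)
    return points - np.outer(points @ unit, unit)

def parallelogram_error(points):
    """Rows are a, b, c, d; return ||(b - a) - (d - c)||."""
    return float(np.linalg.norm((points[1] - points[0]) - (points[3] - points[2])))

# Invented toy data: [gender, royalty, word length].
points = np.array([
    [0.0, 0.0, 3.0],  # man
    [1.0, 0.0, 5.0],  # woman
    [0.0, 1.0, 4.0],  # king
    [1.0, 1.0, 5.0],  # queen
])

# Assumption: the distractor (word length) lies exactly along the third axis.
length_axis = np.array([0.0, 0.0, 1.0])

error_before = parallelogram_error(points)
error_after = parallelogram_error(remove_direction(points, length_axis))
print(error_before, error_after)  # 1.0 0.0
```

With word length mixed in, the four points miss being a parallelogram by 1.0. Once that axis is projected out, the error drops to zero. A real analysis has to estimate the distractor directions from data, and that estimation is the step LDA performs in the study.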

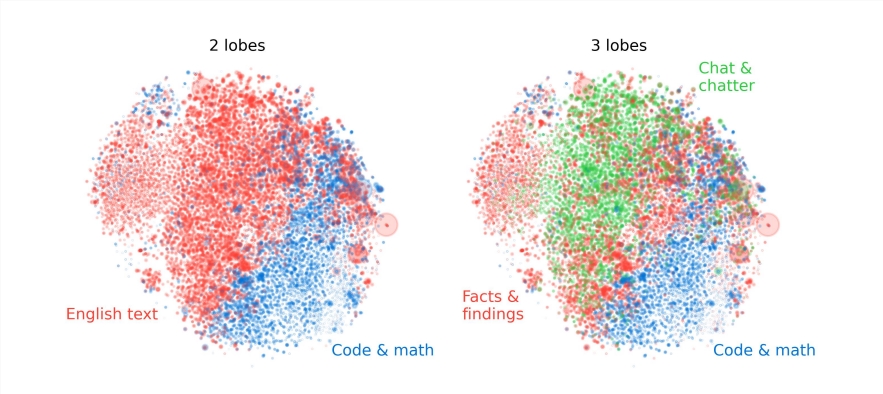

Second, at the mesoscopic level, the researchers found that the LLM activation space has a modular structure reminiscent of the functional divisions of the human brain.

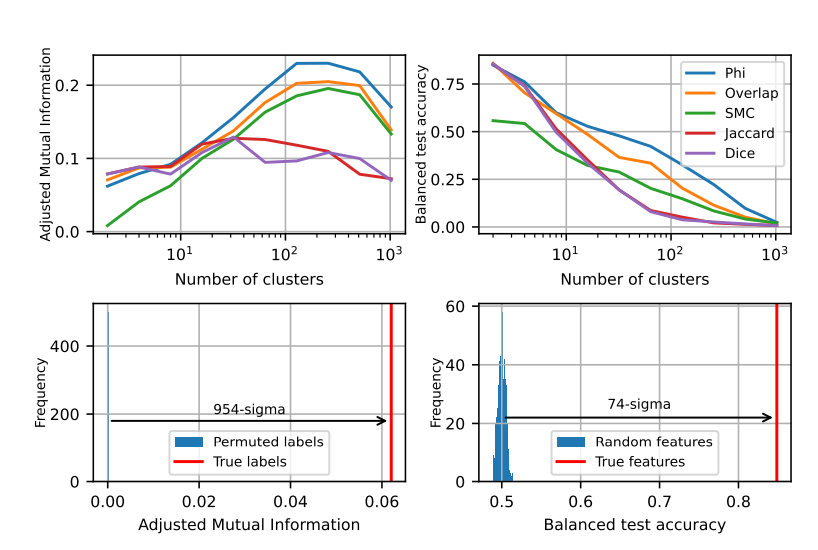

For example, features related to math and coding cluster together to form a "lobe," similar to the functional lobes of the human brain. Using several quantitative metrics, the researchers confirmed the spatial locality of these "lobes": features that tend to co-occur are also clustered together spatially, far more than would be expected if they were randomly distributed.
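One simple way to test a claim like this is sketched below. It is a toy stand-in, not the study's own metrics: the firing record and the feature directions are invented, co-occurrence is measured with Jaccard similarity, spatial closeness with cosine similarity, and the "random" baseline comes from shuffling which direction belongs to which feature.

```python
import numpy as np

rng = np.random.default_rng(0)

# Invented firing record: fires[i, j] is True when feature i is active on text block j.
fires = np.zeros((6, 10), dtype=bool)
fires[:3, :5] = True   # features 0-2 fire together (say, "math and code")
fires[3:, 5:] = True   # features 3-5 fire together on the remaining blocks

# Invented feature directions: each group points roughly the same way.
directions = np.repeat(np.eye(3)[:2], 3, axis=0) + 0.1 * rng.normal(size=(6, 3))
directions /= np.linalg.norm(directions, axis=1, keepdims=True)

def jaccard_matrix(fires):
    """Pairwise Jaccard similarity of the firing patterns."""
    f = fires.astype(float)
    both = f @ f.T
    counts = f.sum(axis=1)
    either = counts[:, None] + counts[None, :] - both
    return both / np.maximum(either, 1.0)

def mean_cosine(directions, pair_mask):
    """Average cosine similarity over the feature pairs selected by pair_mask."""
    cosine = directions @ directions.T
    return float(cosine[pair_mask].mean())

off_diagonal = ~np.eye(len(fires), dtype=bool)
co_occurring = (jaccard_matrix(fires) > 0.5) & off_diagonal

observed = mean_cosine(directions, co_occurring)

# Baseline: shuffle the directions so geometry no longer matches co-occurrence.
shuffled = [
    mean_cosine(directions[rng.permutation(len(directions))], co_occurring)
    for _ in range(200)
]
baseline = float(np.mean(shuffled))
print(observed, baseline)
```

If co-occurring features really are spatially clustered, `observed` should sit well above `baseline`, as it does for this hand-built example.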

Third, at the macro level, the researchers found that the overall structure of the LLM feature point cloud is not isotropic. Instead, its eigenvalue spectrum follows a power law, and this pattern is most pronounced in the middle layers.
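The difference between an isotropic cloud and a power-law one can be illustrated as follows. This is a sketch on synthetic data, not the study's procedure: both point clouds are generated here, and `spectrum_slope` is a hypothetical helper that fits a straight line to the covariance eigenvalues on a log-log plot. A slope near zero indicates an isotropic cloud, while a clearly negative slope indicates power-law decay.

```python
import numpy as np

rng = np.random.default_rng(0)

def spectrum_slope(points):
    """Slope of log(eigenvalue) versus log(rank) for the covariance of `points`."""
    centered = points - points.mean(axis=0)
    covariance = centered.T @ centered / (len(points) - 1)
    eigenvalues = np.linalg.eigvalsh(covariance)[::-1]  # sort descending
    ranks = np.arange(1, len(eigenvalues) + 1)
    slope, _ = np.polyfit(np.log(ranks), np.log(eigenvalues), 1)
    return float(slope)

n_points, n_dims = 20000, 20
ranks = np.arange(1, n_dims + 1, dtype=float)

# Isotropic cloud: every direction has the same variance.
isotropic = rng.normal(size=(n_points, n_dims))

# Power-law cloud: the variance along axis i is built to decay as 1 / i.
power_law = rng.normal(size=(n_points, n_dims)) * np.sqrt(ranks ** -1.0)

slope_isotropic = spectrum_slope(isotropic)
slope_power_law = spectrum_slope(power_law)
print(slope_isotropic, slope_power_law)
```

For the synthetic power-law cloud the fitted slope comes out close to the exponent of -1 that was built in. The isotropic cloud gives a slope close to zero.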

The researchers also measured the clustering entropy at different layers. It was lower in the middle layers, indicating a more concentrated feature representation, and higher in the early and late layers, indicating a more dispersed one.
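The article does not spell out how clustering entropy is computed, so the sketch below uses a deliberately simple stand-in that is an assumption, not the study's definition: divide space into a grid of bins and take the Shannon entropy of how the points are spread across them. It only illustrates the intuition that a concentrated point cloud has lower entropy than a dispersed one. The data and the `occupancy_entropy` helper are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def occupancy_entropy(points, bins=8, value_range=(-4.0, 4.0)):
    """Shannon entropy (in nats) of the fraction of points falling in each grid bin."""
    counts, _ = np.histogramdd(
        points, bins=bins, range=[value_range] * points.shape[1]
    )
    probabilities = counts.ravel() / counts.sum()
    probabilities = probabilities[probabilities > 0]  # skip empty bins
    return float(-(probabilities * np.log(probabilities)).sum())

# Invented 2-D point clouds: one tightly concentrated, one spread out evenly.
concentrated = rng.normal(scale=0.3, size=(5000, 2))
dispersed = rng.uniform(-4.0, 4.0, size=(5000, 2))

entropy_concentrated = occupancy_entropy(concentrated)
entropy_dispersed = occupancy_entropy(dispersed)
print(entropy_concentrated, entropy_dispersed)
```

The dispersed cloud fills nearly all 64 bins evenly, so its entropy approaches the maximum of log(64). The concentrated cloud occupies only a few central bins and scores much lower.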

This research offers a new perspective on the internal mechanisms of large language models and lays groundwork for developing more capable AI systems in the future.

These are exciting results. The editor of Downcodes believes that, as the technology continues to advance, artificial intelligence will show its potential in more fields and bring real benefits to human society.