This repository fine-tunes a state of the art PII detection system and enhances performance with synthetic PII data generation.

Introduction • Highlights • Synthetic PII Data • PII Entity Detection Systems • Issues •

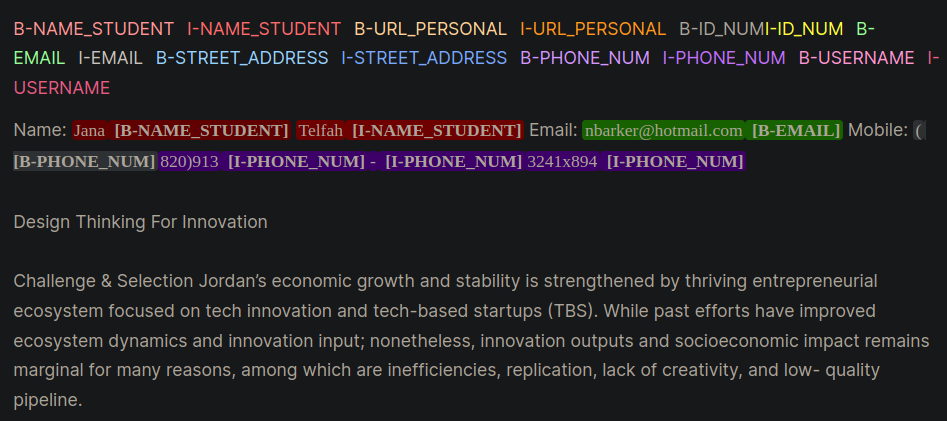

Personal identifiable information (PII) is sensitive data used to identify, locate, or contact an individual. PII entity detection systems can identify, categorize, and redact sensitive information in unstructured text. Improving PII detection systems help maintain the privacy and security of individuals, comply with legal and regulatory requirements, and prevent identity theft, fraud, or other types of harm. Figure 1 provides an example PII entities using inside, outside, beginning (IOB) format.

Figure 1: Example of PII Data in IOB Format [Source].

The work in this repository was derived during the Kaggle competition The Learning Agency Lab - PII Data Detection. Using the techniques in this repository will provide solutions in the Top 1% for the competition.

PII PlaceHolders instead of directly putting PII data into a prompt.class_weights parameter in the Hugging Face Trainer and with a either a Focal Loss or Cross Entropy Loss.The IOB format, also commonly referred to as the BIO format, is a common tagging format for tagging tokens in a chunking task such as Named Entity Recognition (NER) applications. Creating labeled BIO datasets can be time and labor intensive for domain specific datasets. An alternative approach is to synthetically generate PII datasets that closely represent your real-life application. Refer to the gen-data directory for code to create domain specific PII data. The below files would be executed sequentially because they each represent a different task in the synthetic PII data creation.

Synthetic PII data was created using Faker and custom functions to create PII information. This data was placed into LLM generated unstructured text that was created in the next step.

Generative LLMs (e.g., Llama3) were used to generate unstructured text that resembles domain specific text. In this repository the data mimics essays from students in an online course. Refer to the various prompts for prompting examples used in this work.

NOTICE: a helpful insight learned during this work was to prompt the LLM to create placeholders for the PII data. The data from Step #1 will be injected into the placeholders. The PII placeholder approach provided the following anecdotal benefits:

Two examples of prompting strategys are given which demonstrate the differences between prompting with PII directly (Example 1) and then with placeholders (Example 2).

Example 1: LLM Prompting with Direct PII Data Injection

Model Prompt:

Write an sentence introducing yourself and include only your personal information provided below:

- FULL_NAME: John Doe

- PHONE_NUM: 555-123-4567

Model Response:

My name is John Doe and you can reach me at 555-125-4567 or by email at [email protected].This prompting technique routinely introduced a few mistakes from the LLMs that were difficult to programmatically recognize and lead to mis-labeling of PII in the BIO format. Example 1 asks the model to directly insert PII data into the text and notice the few errors:

[email protected] email.Example 2: LLM Prompting with PII PlaceHolders

Model Prompt:

Write an sentence introducing yourself and include only your personal information using the placeholders provided below:

- {FULL_NAME}: First and Last Name

- {PHONE_NUM}: Personal phone number

Model Response:

My name is {FULL_NAME} and you can reach me at {PHONE_NUM}.With Example 2 the LLM did not have the opportunity to transpose any errors in the PHONE_NUM or with the FULL_NAME. Also, it seemed the LLM would less frequently incorporate extra PII entities.

The above two steps decoupled PII data and domain specific text generation. In Step #3 the Step #1 PII data is inserted into Step #2's LLM domain specific generated text. This is useful because you can easily experiment with different combinations of PII data and domain specific text generation data.

The best performing LLM model for PII entity detection was Microsoft's Decoding-enhanced BERT with Disentangled Attention V3 model. This model consistently performs well for encoder model tasks such as named entity recognition (NER), question and answer, and classification.

A good starting point for training a Deberta-V3 model is with the Baseline Deberta-V3 Fine-Tuning module. In this module a custom Hugging Face Trainer was created to train with either Focal Loss or CE loss to account for class imbalance.

class CustomTrainer(Trainer):

def __init__(

self,

focal_loss_info: SimpleNamespace,

*args,

class_weights=None,

**kwargs):

super().__init__(*args, **kwargs)

# Assuming class_weights is a Tensor of weights for each class

self.class_weights = class_weights

self.focal_loss_info = focal_loss_info

def compute_loss(self, model, inputs, return_outputs=False):

# Extract labels

labels = inputs.pop("labels")

# Forward pass

outputs = model(**inputs)

logits = outputs.logits

# Loss calculation

if self.focal_loss_info.apply:

loss_fct = FocalLoss(alpha=5, gamma=2, reduction='mean')

loss = loss_fct(logits.view(-1, self.model.config.num_labels),

labels.view(-1))

else:

loss_fct = CrossEntropyLoss(weight=self.class_weights)

if self.label_smoother is not None and "labels" in inputs:

loss = self.label_smoother(outputs, inputs)

else:

loss = loss_fct(logits.view(-1, self.model.config.num_labels),

labels.view(-1))

return (loss, outputs) if return_outputs else lossFurther tricks and tips to help fine-tune PII detection systems that are contained in the training directory are:

unlabeled datasets to expose a model to domain-specific language patterns and terminology. Fine-tuning a model that underwent additional pre-training on a specific task or domain, beginning with an initial checkpoint tailored for the task and data distribution at hand, typically yields better performance compared to fine-tuning models that start from a generic initial checkpoint [Sources: 1, 2].NOTE: This workflow presented here can be adapted for many Hugging Face deep learning applications, not just LLMs.

This repository is will do its best to be maintained. If you face any issue or want to make improvements please raise an issue or submit a Pull Request. ?