Aha Natural Language Processing Package integrates HanLP , Word2Vec , Mate-Tools and other projects, providing high-quality Chinese natural language processing services.

The current functions provided by AHANLP are:

The latest version of ahanlp.jar and the corresponding basic data packet AHANLP_base , click here to download. The configuration files ahanlp.properties and hanlp.properties are put into classpath. For most projects, you only need to put them in the src directory. The IDE will automatically copy them to the classpath during compilation.

AHANLP follows HanLP's data organization structure, code and data separation. Users can choose corresponding data packets to download according to their needs:

dictionary directory and model directory are stored in the project's data/ directory.data/model/ directory.python -m pip install xxx.whl after downloading). Then download word_cloud (extract code 9jb6) and place the extracted word_cloud folder in the project root directory.All parameters in the AHANLP project are read from the configuration file (user modification is not recommended), and the following is a brief description.

The main configuration file is ahanlp.properties , and the Word2Vec model path needs to be configured, and the default is

word2vecModel = data/model/wiki_chinese_word2vec(Google).model

hanLDAModel = data/model/SogouCS_LDA.model

srlTaggerModel = data/model/srl/CoNLL2009-ST-Chinese-ALL.anna-3.3.postagger.model

srlParserModel = data/model/srl/CoNLL2009-ST-Chinese-ALL.anna-3.3.parser.model

srlModel = data/model/srl/CoNLL2009-ST-Chinese-ALL.anna-3.3.srl-4.1.srl.model

wordCloudPath = word_cloud/

pythonCMD = python

The HanLP configuration file is hanlp.properties . You only need to set the path where the data directory is located on the first line. The default is

root=./

Note: The semantic role labeling module has a high memory footprint. If you want to use this feature, please set the maximum memory footprint of the JVM to 4GB.

Almost all functions of AHANLP can be called quickly through the tool class AHANLP . It is also recommended that users always call through the tool class AHANLP, so that after AHANLP is upgraded in the future, users do not need to modify the calling code.

All demos are located under test.demo, covering more details than the introduction document below. It is highly recommended to run it once, and you can also check it out:

AHANLP interface documentation .

String content = "苏州大学的周国栋教授正在教授自然语言处理课程。" ;

// 标准分词

List < Term > stdSegResult = AHANLP . StandardSegment ( content );

System . out . println ( stdSegResult );

//[苏州大学/ntu, 的/ude1, 周/qt, 国栋/nz, 教授/nnt, 正在/d, 教授/nnt, 自然语言处理/nz, 课程/n, 。/w]

// NLP分词

List < Term > nlpSegResult = AHANLP . NLPSegment ( content );

System . out . println ( nlpSegResult );

//[苏州大学/ntu, 的/u, 周国栋/nr, 教授/n, 正在/d, 教授/v, 自然语言处理/nz, 课程/n, 。/w]Standard Segment encapsulates the HMM-Bigram model and uses the shortest-circuit method to divide the word (the shortest-circuit solution uses the Viterbi algorithm), taking into account both efficiency and effect. NLP participle (NLPSegment) encapsulates the perceptron model, supported by the structured perceptron sequence labeling framework, and performs part-of-speech annotation and naming entity recognition at the same time, with higher accuracy and suitable for use in the production environment.

The default return result of word participle contains word and part of speech. You can obtain the word or part of speech list by AHANLP.getWordList(stdSegResult) and AHANLP.getNatureList(stdSegResult) respectively. For part-of-speech annotation, please refer to the "HanLP part-of-speech annotation collection".

If you need to customize part-of-speech filtering, you can use the me.xiaosheng.chnlp.seg.POSFilter class, which also implements common methods such as filtering punctuation and retaining real words.

// 过滤标点

POSFilter . removePunc ( stdSegResult );

// 保留名词

POSFilter . selectPOS ( stdSegResult , Arrays . asList ( "n" , "ns" , "nr" , "nt" , "nz" ));The word participle above supports filtering of stop words, just bring the second parameter and set it to true.

List < Term > stdSegResult = AHANLP . NLPSegment ( content , true );

List < String > stdWordList = AHANLP . getWordList ( stdSegResult );

System . out . println ( stdWordList );

//[苏州大学, 周国栋, 教授, 正在, 教授, 自然语言处理, 课程] Word segmentation supports user-defined dictionary. HanLP uses the CustomDictionary class to represent it. It is a global user-defined dictionary that affects all vocabulary machines. The dictionary text path is data/dictionary/custom/CustomDictionary.txt , where users can add their own words (not recommended). You can also create a new text file separately and append the dictionary through the configuration file CustomDictionaryPath=data/dictionary/custom/CustomDictionary.txt; 我的词典.txt; (recommended).

Dictionary format:

[单词] [词性A] [A的频次] [词性B] [B的频次] ... If part of speech is not written, it means that the default part of speech is adopted in the dictionary.全国地名大全.txt ns; if the space followed by the part of speech is immediately followed by the part of speech, the dictionary defaults to the part of speech.**Note: **The program reads dictionary from cache files (filename.txt.bin or filename.txt.trie.dat and filename.txt.trie.value) by default. If you modify any dictionary, only deleting the cache will take effect.

List < List < Term >> segResults = AHANLP . seg2sentence ( "Standard" , content , true );

for ( int i = 0 ; i < senList . size (); i ++)

System . out . println (( i + 1 ) + " : " + AHANLP . getWordList ( results . get ( i )));Partitioning words by sentences on long text is also a common use, and returns a list of results composed of all sentences of word participle. The type of word segmenter is controlled through the first segType parameter: "Standard" corresponds to the standard word segment, and "NLP" corresponds to the NLP participle.

Of course, you can also manually break sentences and then divide them into multiple sentences.

List < String > senList = AHANLP . splitSentence ( content );

List < List < Term >> senWordList = AHANLP . splitWordInSentences ( "Standard" , senList , true );

for ( int i = 0 ; i < senWordList . size (); i ++)

System . out . println (( i + 1 ) + " : " + senWordList . get ( i )); String sentence = "2013年9月,习近平出席上合组织比什凯克峰会和二十国集团圣彼得堡峰会,"

+ "并对哈萨克斯坦等中亚4国进行国事访问。在“一带一路”建设中,这次重大外交行程注定要被历史铭记。" ;

List < NERTerm > NERResult = AHANLP . NER ( sentence );

System . out . println ( NERResult );

//[中亚/loc, 习近平/per, 上合组织/org, 二十国集团/org, 哈萨克斯坦/loc, 比什凯克/loc, 圣彼得堡/loc, 2013年9月/time] The perceptron and CRF models are encapsulated, supported by the structured perceptron sequence labeling framework, and the time, place name, person name, and organization name in the text are identified: person name per , place name loc , organization name org , and time .

String sentence = "北京是中国的首都" ;

CoNLLSentence deps = AHANLP . DependencyParse ( sentence );

for ( CoNLLWord dep : deps )

System . out . printf ( "%s --(%s)--> %s n " , dep . LEMMA , dep . DEPREL , dep . HEAD . LEMMA );

/*

北京 --(主谓关系)--> 是

是 --(核心关系)--> ##核心##

中国 --(定中关系)--> 首都

的 --(右附加关系)--> 中国

首都 --(动宾关系)--> 是

*/

// 词语依存路径

List < List < Term >> wordPaths = AHANLP . getWordPathsInDST ( sentence );

for ( List < Term > wordPath : wordPaths ) {

System . out . println ( wordPath . get ( 0 ). word + " : " + AHANLP . getWordList ( wordPath ));

}

/*

北京 : [北京, 是]

是 : [是]

中国 : [中国, 首都, 是]

的 : [的, 中国, 首都, 是]

首都 : [首都, 是]

*/

// 依存句法树前2层的词语

List < String > words = AHANLP . getTopWordsInDST ( sentence , 1 );

System . out . println ( words );

// [北京, 是, 首都] Dependency syntax analysis is an encapsulation of NeuralNetworkDependencyParser in HanLP, using a high-performance dependency syntax analyzer based on neural networks. The analysis results are in CoNLL format, and you can iterate according to the CoNLLWord type. As shown above, CoNLLWord.LEMMA is a slave word, CoNLLWord.HEAD.LEMMA is the dominant word, and CoNLLWord.DEPREL is the dependent label, and defaults to the Chinese label.

If you need to use an English label, just bring the second parameter and set it to true

CoNLLSentence enDeps = AHANLP.DependencyParse(sentence, true);

For detailed descriptions of dependency tags, please refer to "Dependency Tags".

String document = "我国第二艘航空母舰下水仪式26日上午在中国船舶重工集团公司大连造船厂举行。航空母舰在拖曳牵引下缓缓移出船坞,停靠码头。目前,航空母舰主船体完成建造,动力、电力等主要系统设备安装到位。" ;

List < String > wordList = AHANLP . extractKeyword ( document , 5 );

System . out . println ( wordList );

//[航空母舰, 动力, 建造, 牵引, 完成]The extractKeyword function sets the number of keywords returned through the second parameter. The Rank value of each word is calculated internally through the TextRank algorithm, and arranged it in descending order of the Rank value, extracting the previous few as keywords. For specific principles, please refer to "TextRank Algorithm Extraction Keywords and Abstracts".

String document = "我国第二艘航空母舰下水仪式26日上午在中国船舶重工集团公司大连造船厂举行。"

+ "按照国际惯例,剪彩后进行“掷瓶礼”。随着一瓶香槟酒摔碎舰艏,两舷喷射绚丽彩带,周边船舶一起鸣响汽笛,全场响起热烈掌声。"

+ "航空母舰在拖曳牵引下缓缓移出船坞,停靠码头。第二艘航空母舰由我国自行研制,2013年11月开工,2015年3月开始坞内建造。" + "目前,航空母舰主船体完成建造,动力、电力等主要系统设备安装到位。"

+ "出坞下水是航空母舰建设的重大节点之一,标志着我国自主设计建造航空母舰取得重大阶段性成果。" + "下一步,该航空母舰将按计划进行系统设备调试和舾装施工,并全面开展系泊试验。" ;

List < String > senList = AHANLP . extractKeySentence ( document , 5 );

System . out . println ( "Key Sentences: " );

for ( int i = 0 ; i < senList . size (); i ++)

System . out . println (( i + 1 ) + " : " + senList . get ( i ));

System . out . println ( "Summary: " );

System . out . println ( AHANLP . extractSummary ( document , 50 ));

/*

Key Sentences:

1 : 航空母舰主船体完成建造

2 : 该航空母舰将按计划进行系统设备调试和舾装施工

3 : 我国第二艘航空母舰下水仪式26日上午在中国船舶重工集团公司大连造船厂举行

4 : 标志着我国自主设计建造航空母舰取得重大阶段性成果

5 : 出坞下水是航空母舰建设的重大节点之一

Summary:

我国第二艘航空母舰下水仪式26日上午在中国船舶重工集团公司大连造船厂举行。航空母舰主船体完成建造。

*/The extractKeySentence function is responsible for extracting key sentences, and the second parameter controls the number of key sentences extracted. The extractSummary function is responsible for automatic summary, and the second parameter sets the upper limit of the summary word count. The Rank value of each sentence is calculated internally through the TextRank algorithm, and arranged it in descending order of the Rank value, extracting the previous few as key sentences. The automatic summary is similar, and also considers the position of the sentence in the original text and the length of the sentence. For specific principles, please refer to "TextRank Algorithm Extraction Keywords and Abstracts".

The degree of similarity between sentences (i.e., the weights in the TextRank algorithm) is calculated using the functions provided by Word2Vec. The model trained in Wikipedia's Chinese corpus is used by default, and a custom model can also be used.

System . out . println ( "猫 | 狗 : " + AHANLP . wordSimilarity ( "猫" , "狗" ));

System . out . println ( "计算机 | 电脑 : " + AHANLP . wordSimilarity ( "计算机" , "电脑" ));

System . out . println ( "计算机 | 男人 : " + AHANLP . wordSimilarity ( "计算机" , "男人" ));

String s1 = "苏州有多条公路正在施工,造成局部地区汽车行驶非常缓慢。" ;

String s2 = "苏州最近有多条公路在施工,导致部分地区交通拥堵,汽车难以通行。" ;

String s3 = "苏州是一座美丽的城市,四季分明,雨量充沛。" ;

System . out . println ( "s1 | s1 : " + AHANLP . sentenceSimilarity ( s1 , s1 ));

System . out . println ( "s1 | s2 : " + AHANLP . sentenceSimilarity ( s1 , s2 ));

System . out . println ( "s1 | s3 : " + AHANLP . sentenceSimilarity ( s1 , s3 ));

/*

猫 | 狗 : 0.71021223

计算机 | 电脑 : 0.64130974

计算机 | 男人 : -0.013071457

s1 | s1 : 1.0

s1 | s2 : 0.6512632

s1 | s3 : 0.3648093

*/ wordSimilarity and sentenceSimilarity are functions that calculate the similarity of words and sentences respectively. The calculation process uses the word vectors pre-trained by the Word2Vec model. Before use, you need to download the word2vec model, and then store the decompressed model file in the project's data/model/ directory. Word similarity is directly calculated by calculating the word vector cosine value. You can refer to Word2Vec/issues1 for the way to obtain sentence similarity. If you want to train Word2Vec model yourself, you can refer to training Google version of the model.

Note: sentenceSimilarity uses standard word segmentation to segment sentences by default and filters out stop words.

String sentence = "全球最大石油生产商沙特阿美(Saudi Aramco)周三(7月21日)证实,公司的一些文件遭泄露。" ;

List < SRLPredicate > predicateList = AHANLP . SRL ( sentence );

for ( SRLPredicate p : predicateList ) {

System . out . print ( "谓词: " + p . getPredicate ());

System . out . print ( " t t句内偏移量: " + p . getLocalOffset ());

System . out . print ( " t句内索引: [" + p . getLocalIdxs ()[ 0 ] + ", " + p . getLocalIdxs ()[ 1 ] + "] n " );

for ( Arg arg : p . getArguments ()) {

System . out . print ( " t " + arg . getLabel () + ": " + arg . getSpan ());

System . out . print ( " t t句内偏移量: " + arg . getLocalOffset ());

System . out . print ( " t句内索引: [" + arg . getLocalIdxs ()[ 0 ] + ", " + arg . getLocalIdxs ()[ 1 ] + "] n " );

}

System . out . println ();

}

/*

谓词: 证实 句内偏移量: 36 句内索引: [36, 37]

TMP: 周三(7月21日) 句内偏移量: 27 句内索引: [27, 35]

A0: 全球最大石油生产商沙特阿美(Saudi Aramco) 句内偏移量: 0 句内索引: [0, 26]

A1: 公司的一些文件遭泄露 句内偏移量: 39 句内索引: [39, 48]

谓词: 遭 句内偏移量: 46 句内索引: [46, 46]

A0: 公司的一些文件 句内偏移量: 39 句内索引: [39, 45]

A1: 泄露 句内偏移量: 47 句内索引: [47, 48]

*/ The SRL component in Mate-Tools (including part-of-speech recognizer and dependent syntax analyzer) can identify the predicate in the text and the corresponding arguments: agent A0 , recipient A1 , time TMP and location LOC . Added support for long text SRLParseContent(content) and matching text offsets, which can be positioned through the position and offsets of predicates and arguments in the text.

Note: Each predicate corresponds to more than one argument in a certain category, for example, the predicate "attack" can correspond to multiple activators A0 (attacker).

int topicNum510 = AHANLP . topicInference ( "data/mini/军事_510.txt" );

System . out . println ( "军事_510.txt 最可能的主题号为: " + topicNum510 );

int topicNum610 = AHANLP . topicInference ( "data/mini/军事_610.txt" );

System . out . println ( "军事_610.txt 最可能的主题号为: " + topicNum610 );LDA Topic Prediction By default, the news LDA model is used to predict topics, and the topic number with the maximum probability is output. The default model is trained from 1,000 (maybe dissatisfied) news from each category in Sogou News Corpus (SogouCS), with a total of 100 topics.

Users can also train the LDA model by themselves through ZHNLP.trainLDAModel() . After training, they can use the overloaded topicInference method to load it.

AHANLP . trainLDAModel ( "data/mini/" , 10 , "data/model/testLDA.model" );

int topicNum710 = AHANLP . topicInference ( "data/model/testLDA.model" , "data/mini/军事_710.txt" );

System . out . println ( "军事_710.txt 最可能的主题号为: " + topicNum710 );

int topicNum810 = AHANLP . topicInference ( "data/model/testLDA.model" , "data/mini/军事_810.txt" );

System . out . println ( "军事_810.txt 最可能的主题号为: " + topicNum810 ); If you need to run LDADemo.java for testing, you also need to download SogouCA_mini and store the extracted mini folder in the project's data/ directory.

String tc = AHANLP . convertSC2TC ( "用笔记本电脑写程序" );

System . out . println ( tc );

String sc = AHANLP . convertTC2SC ( "「以後等妳當上皇后,就能買士多啤梨慶祝了」" );

System . out . println ( sc );

/*

用筆記本電腦寫程序

“以后等你当上皇后,就能买草莓庆祝了”

*/ The simple and traditional Chinese conversion is a wrapper to convertToTraditionalChinese and convertToSimplifiedChinese methods in HanLP. It can identify words that are divided between simple and traditional Chinese, such as打印机=印表機; and many characters that cannot be distinguished by simple and traditional Chinese conversion tools, such as the two "年" in "年" and "Queen".

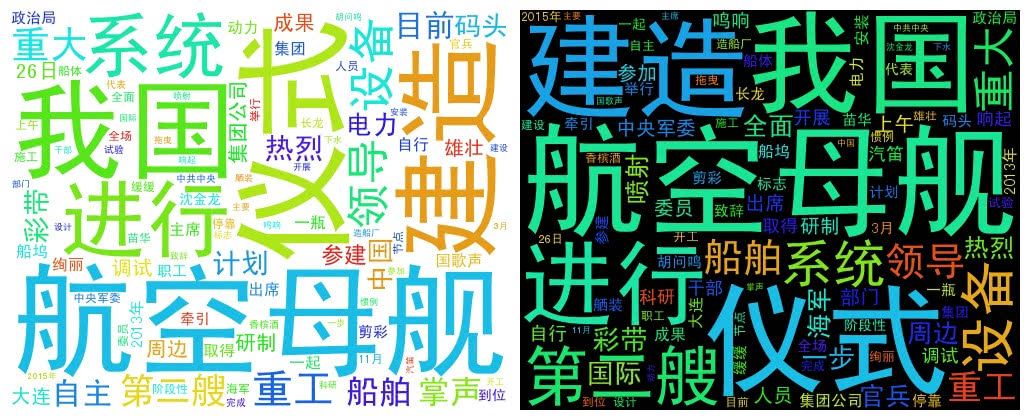

String document = "我国第二艘航空母舰下水仪式26日上午在中国船舶重工集团公司大连造船厂举行。" + "中共中央政治局委员、中央军委副主席范长龙出席仪式并致辞。9时许,仪式在雄壮的国歌声中开始。"

+ "按照国际惯例,剪彩后进行“掷瓶礼”。随着一瓶香槟酒摔碎舰艏,两舷喷射绚丽彩带,周边船舶一起鸣响汽笛,全场响起热烈掌声。"

+ "航空母舰在拖曳牵引下缓缓移出船坞,停靠码头。第二艘航空母舰由我国自行研制,2013年11月开工,2015年3月开始坞内建造。" + "目前,航空母舰主船体完成建造,动力、电力等主要系统设备安装到位。"

+ "出坞下水是航空母舰建设的重大节点之一,标志着我国自主设计建造航空母舰取得重大阶段性成果。" + "下一步,该航空母舰将按计划进行系统设备调试和舾装施工,并全面开展系泊试验。"

+ "海军、中船重工集团领导沈金龙、苗华、胡问鸣以及军地有关部门领导和科研人员、干部职工、参建官兵代表等参加仪式。" ;

List < String > wordList = AHANLP . getWordList ( AHANLP . StandardSegment ( document , true ));

WordCloud wc = new WordCloud ( wordList );

try {

wc . createImage ( "D: \ test.png" );

} catch ( IOException e ) {

e . printStackTrace ();

} WordCloud uses a word list to create a WordCloud object, then calls createImage method to create a word cloud image, and passes the saved address of the image as a parameter. The word cloud draws the size of each word according to the word frequency. The higher the word frequency, the larger the word; the color and position are generated randomly.

The default generated image size is 500x400 and the background color is white. You can also customize the background color and image size of the image

WordCloud wc = new WordCloud ( wordList );

wc . createImage ( "D: \ test_black.png" , true ); // 黑色背景

wc . createImage ( "D: \ test_1000x800.png" , 1000 , 800 ); // 尺寸 1000x800

wc . createImage ( "D: \ test_1000x800_black.png" , 1000 , 800 , true ); // 尺寸 1000x800, 黑色背景I would like to pay tribute to the authors of the following projects, which are these open source software that promote the popularization and application of natural language processing technology.