A summary of the basics of embedded systems, programming languages, productivity tools, and related topics.

Recently I heard a friend make a very good point:

The iPhone is an embedded ARM system running an OS, and CUDA can also be understood as heterogeneous embedded computing.

From this perspective, how can you become an excellent embedded engineer if you separate embedded work from computer science, equate it with technologies such as microcontrollers, ARM, and FPGAs, and study only those narrow technical fields?

Technical documentation and study records:

Embedded system basics:

Machine Learning:

Programming languages:

Environment setup and tools:

Theoretical foundations:

This repository will be updated with embedded-related knowledge over the long term. Part of the content is the author's study notes and lessons from experience, part is skills used routinely at work, and part is embedded knowledge collected from various sources. By summarizing and refining what you already know, you can keep learning more useful skills.

Recently I have come to a new view of the role of embedded engineers: embedded technology is a branch of computer science.

Electronics graduates typically start at the machine level (microcontrollers, microcomputer principles), move up to the language level (C, Python), and then learn data structures and algorithms. This route looks reasonable and is fine for getting started, but it has a serious flaw: you learn what works without understanding why it works.

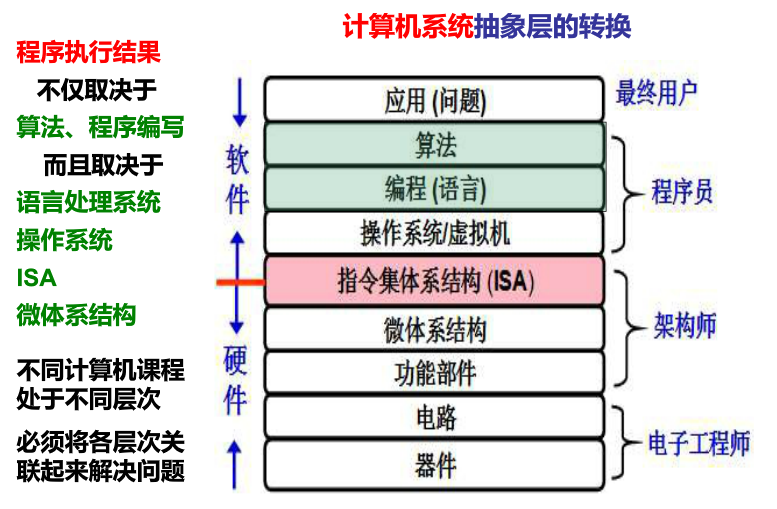

Problems in embedded work are often cross-cutting: working only at the language or algorithm level frequently cannot solve them, and sometimes you have to go down to the machine level. So what layers make up an embedded system, or a computer system as a whole? To understand the overall knowledge framework and where you sit in it, you need a deeper understanding of how computers are organized.

The book I recommend is the third edition of "Computer Systems: A Programmer's Perspective" by Randal E. Bryant and David R. O'Hallaron (published in Chinese as "In-depth Understanding of Computer Systems"). A matching course is "Basics of Computer Systems", taught by Professor Yuan Chunfeng on the MOOC platform. Together they help build an understanding of the abstraction layers of the whole computer system and strengthen our overall ability to solve embedded problems.

Embedded engineers need real depth in their technical foundations.

After several years of engineering work, I have used many kinds of processors and designed and implemented a simple 16-bit CPU myself. I gradually realized that knowing how to use a particular CPU is not the most important knowledge; what matters more is the principles of computer organization and computer architecture (x86, ARM, RISC-V). A deep understanding of computer fundamentals lets us generalize from what we already know when learning new computing hardware, so newer heterogeneous computing technologies such as GPUs, TPUs, and NPUs no longer seem too difficult.

Programming languages are essential tools for engineers. If asked whether I need to keep learning new programming languages, my answer is yes. But I think the most important thing is not the language itself but its design ideas and the scenarios it suits. Beyond that lies the fundamental knowledge used to create programming languages, such as compiler theory. These underlying technologies are the foundation for building a new language; once you have them, it becomes much easier to understand what lies behind a language and to learn and use a new one.

Quickly locating problems in a system is an essential skill for every embedded engineer, so how do you debug effectively? I once discussed this with a colleague who is a real expert, and he said: "I'd say I mainly rely on thinking." That answer is obviously too brief, but we have to admit that debugging really is based on thinking. The key question is: how should you think?

Recently I read "How to Solve It" by George Pólya, which gave me some inspiration and made me realize that debugging is a question of how you think: the process of fixing a bug is very similar to solving a math problem.

Engineers who want to improve their debugging should often ask themselves: this solution looks workable and appears correct, but how could anyone have thought of it? This experiment seems to establish a fact, but how was that fact discovered? And how could I think of such solutions or discover such facts myself? At work, don't just try to understand the fixes for various bugs; also understand the motivation and the steps behind each fix, and do your best to explain them to others.