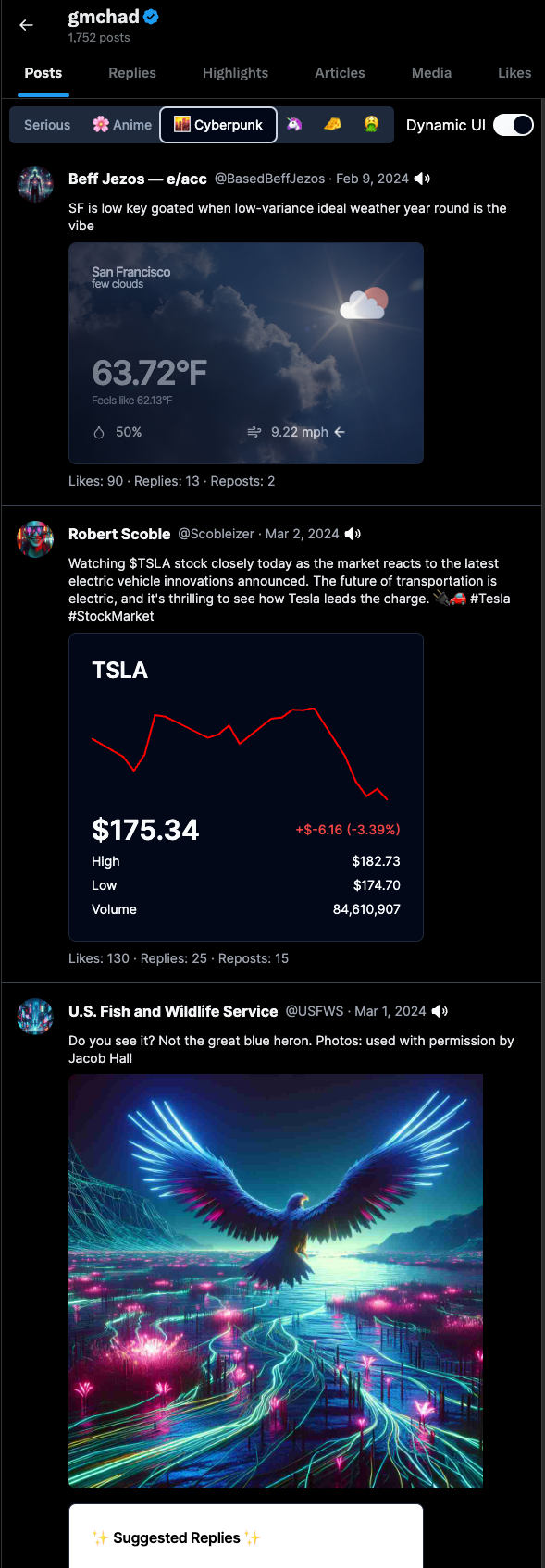

Generative-X (twitter) augments your Twitter timeline with AI, using image filters, text-to-speech, auto-replies, and dynamic UI components that pop in to give more context to tweets!

Built during the SPCxOpenAI Hackathon

Watch the video on X

Under the hood, there's a Next.js application and a Chrome extension. The extension pulls tweets off your feed and injects the Next.js app into X as an iframe.
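As a rough illustration of the hand-off between the extension and the app, the sketch below builds the URL an injected iframe could point at. How the real extension passes tweet data is not documented here, so the `ScrapedTweet` shape, the query parameter names, and the `http://localhost:3000` origin are all assumptions.

```typescript
// Hypothetical shape of a tweet scraped from the feed; field names are assumptions.
interface ScrapedTweet {
  id: string;
  author: string;
  text: string;
}

// Assumed origin of the locally running Next.js app.
const APP_ORIGIN = "http://localhost:3000";

// Build the iframe URL, passing the tweet as URL-encoded query parameters.
function buildIframeSrc(origin: string, tweet: ScrapedTweet): string {
  const pairs: Array<[string, string]> = [
    ["id", tweet.id],
    ["author", tweet.author],
    ["text", tweet.text],
  ];
  const query = pairs
    .map(([key, value]) => `${key}=${encodeURIComponent(value)}`)
    .join("&");
  return `${origin}/?${query}`;
}

// In a content script, the result could be assigned to an iframe, for example:
//   const frame = document.createElement("iframe");
//   frame.src = buildIframeSrc(APP_ORIGIN, tweet);
//   tweetElement.appendChild(frame);
const exampleSrc = buildIframeSrc(APP_ORIGIN, {
  id: "1",
  author: "a b",
  text: "hi & bye",
});
```

Passing data through the query string keeps the sketch simple; a real extension might prefer `window.postMessage` for longer payloads.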

Let's start by running the Next.js app, which will use a sample Twitter feed.

cp .env.local.example .env.local

npm run dev

You'll need to load the extension into your browser to use it.

Load the extension from the chrome_extensions/src folder (the src folder at the same level as this file).

There are currently 5 dynamic components that can be rendered based on tweet context. We use GPT-3.5 with function calling to determine which component to render.
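The selection step can be sketched as follows. This is not the project's actual code: the tool names (`render_weather`, `render_stocks`), their parameter schemas, and the `pickComponent` helper are hypothetical. Only the overall shape, a list of function tools sent to the model and a tool call read back from the response, follows the OpenAI Chat Completions API. No API request is made here.

```typescript
type DuiName = "weather" | "stocks" | "politics" | "clothing" | "reply";

// Shape of one entry in the `tools` array of a chat completion request.
interface FunctionTool {
  type: "function";
  function: {
    name: string;
    description: string;
    parameters: Record<string, unknown>; // JSON Schema for the arguments
  };
}

// Subset of a tool call as returned on the assistant message.
interface ToolCall {
  function: { name: string; arguments: string };
}

// Hypothetical registry pairing each component with the tool that triggers it.
const duiRegistry: Array<{ component: DuiName; tool: FunctionTool }> = [
  {
    component: "weather",
    tool: {
      type: "function",
      function: {
        name: "render_weather",
        description: "Use when the tweet mentions a location and the weather.",
        parameters: {
          type: "object",
          properties: { location: { type: "string" } },
          required: ["location"],
        },
      },
    },
  },
  {
    component: "stocks",
    tool: {
      type: "function",
      function: {
        name: "render_stocks",
        description: "Use when the tweet mentions a ticker symbol such as $TSLA.",
        parameters: {
          type: "object",
          properties: { ticker: { type: "string" } },
          required: ["ticker"],
        },
      },
    },
  },
];

// This array is what would be passed as `tools` in the request body.
const openAiTools: FunctionTool[] = duiRegistry.map((entry) => entry.tool);

// Map the model's first tool call to a component, falling back to "reply".
function pickComponent(
  toolCalls: ToolCall[] | undefined
): { component: DuiName; props: Record<string, unknown> } {
  const fallback = { component: "reply" as DuiName, props: {} };
  const call = toolCalls && toolCalls.length > 0 ? toolCalls[0] : undefined;
  if (!call) return fallback;
  const entry = duiRegistry.filter(
    (e) => e.tool.function.name === call.function.name
  )[0];
  if (!entry) return fallback;
  try {
    // The model returns arguments as a JSON string, which may be malformed.
    const props = JSON.parse(call.function.arguments) as Record<string, unknown>;
    return { component: entry.component, props };
  } catch {
    return fallback;
  }
}
```

Keeping the tool definition next to its component name means adding a new component is a single registry entry, and any unknown or malformed tool call degrades to the default reply component.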

Dynamic User Interfaces (DUIs) can be found in /app/components/dui

weather.tsx

Renders live weather data if a location and the word "weather" are mentioned in a tweet

stocks.tsx

Renders live stock data if a ticker symbol (e.g. $TSLA) is mentioned in a tweet
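In the project the model decides whether a tweet mentions a ticker. As an illustration of what a "ticker mention" looks like, here is a minimal regex-based sketch. `extractTickers` is a hypothetical helper, and the assumption that a ticker is `$` followed by 1 to 5 uppercase letters is a simplification.

```typescript
// Hypothetical helper: find cashtags such as $TSLA in tweet text.
// Assumes a ticker is "$" followed by 1 to 5 uppercase ASCII letters.
function extractTickers(text: string): string[] {
  const matches = text.match(/\$[A-Z]{1,5}\b/g) || [];
  return matches
    .map((match) => match.slice(1)) // drop the leading "$"
    .filter((ticker, index, all) => all.indexOf(ticker) === index); // de-duplicate, keep order
}

const exampleTickers = extractTickers(
  "Long $TSLA and $AAPL, paid $100 for $TSLA merch"
);
```

Dollar amounts such as `$100` are skipped because the pattern only accepts letters, and repeated mentions are collapsed so each ticker is fetched once.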

poltics.tsx

Renders a political scale with reference links (generated by Perplexity Sonar) if a tweet is political

clothing.tsx

This component tries to match the clothing items in a tweet image to items in the Nordstrom Rack catalog. For the demo, it only renders for tweets under the @TechBroDrip account.

Reply.tsx

Renders a few suggested replies with TTS in a reply component. This is the default component if no other components are rendered.

This application gets better with more components. If you have ideas for components that could augment the X experience, open a PR.

Docs on the flow for adding new components are coming soon.

actions/tsx and into its own API (there is currently an issue where server action calls are not parallelized in production; see vercel/next.js#50743)