
Telegram Channel

For earlier updates, see the Version Record.

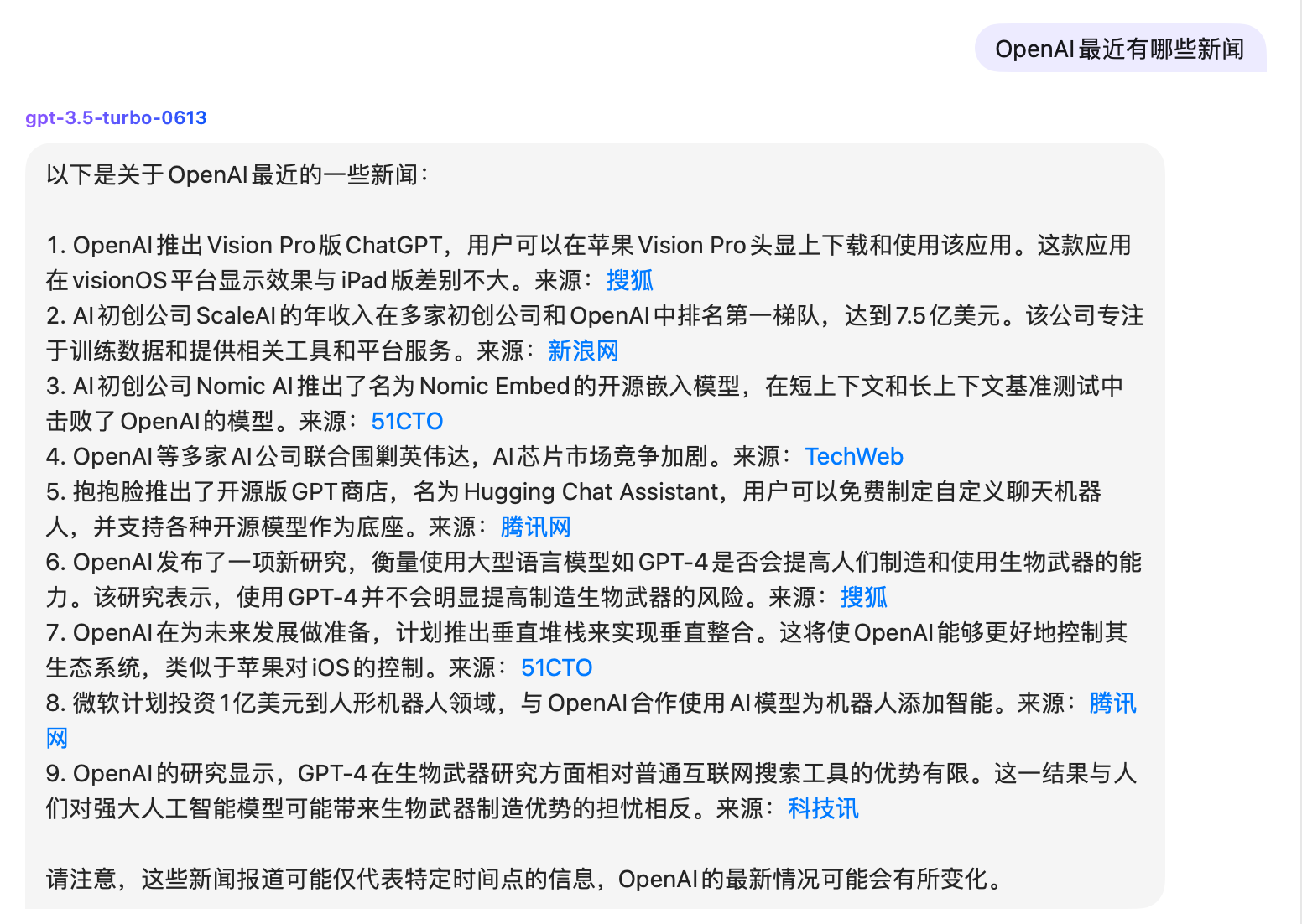

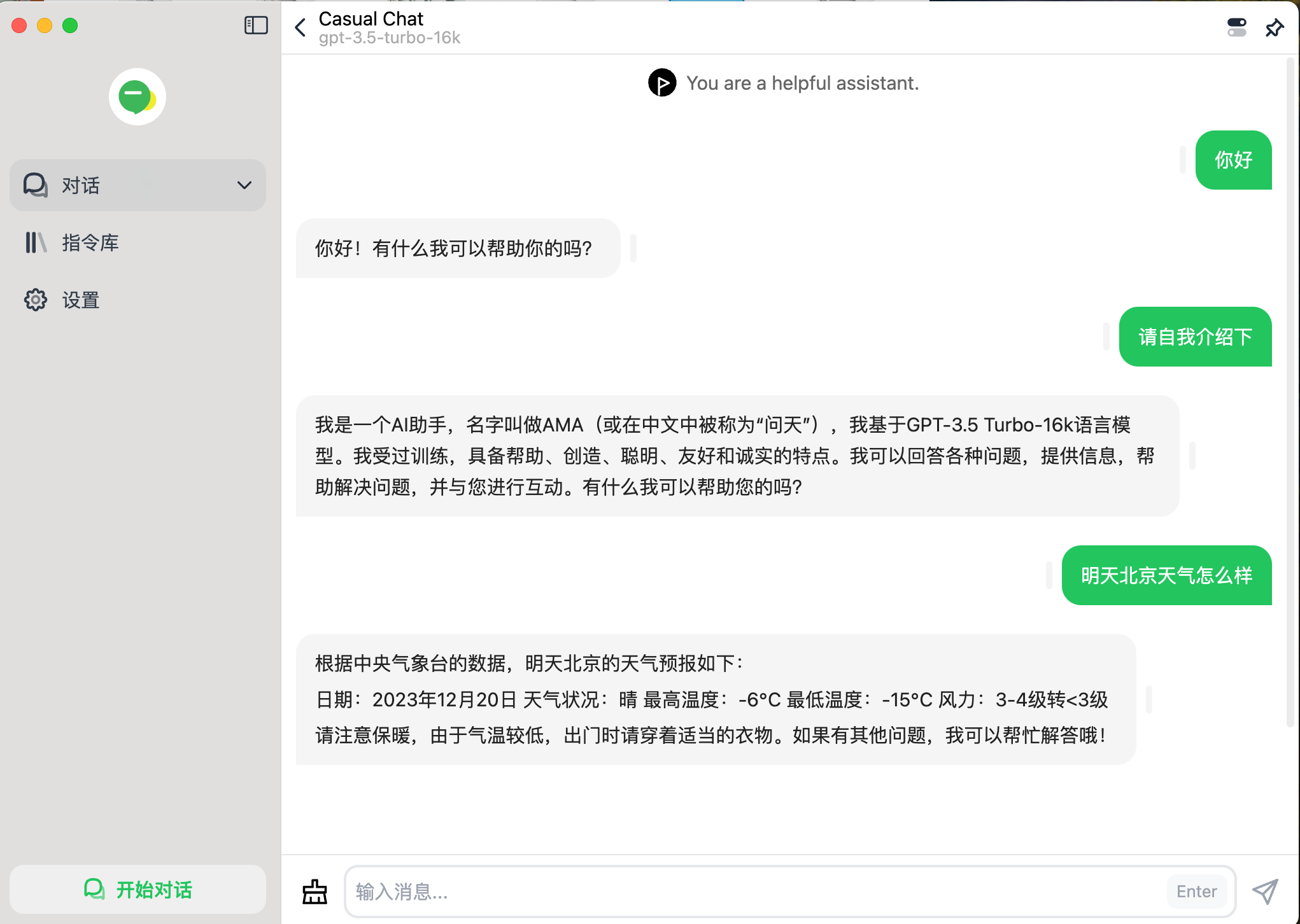

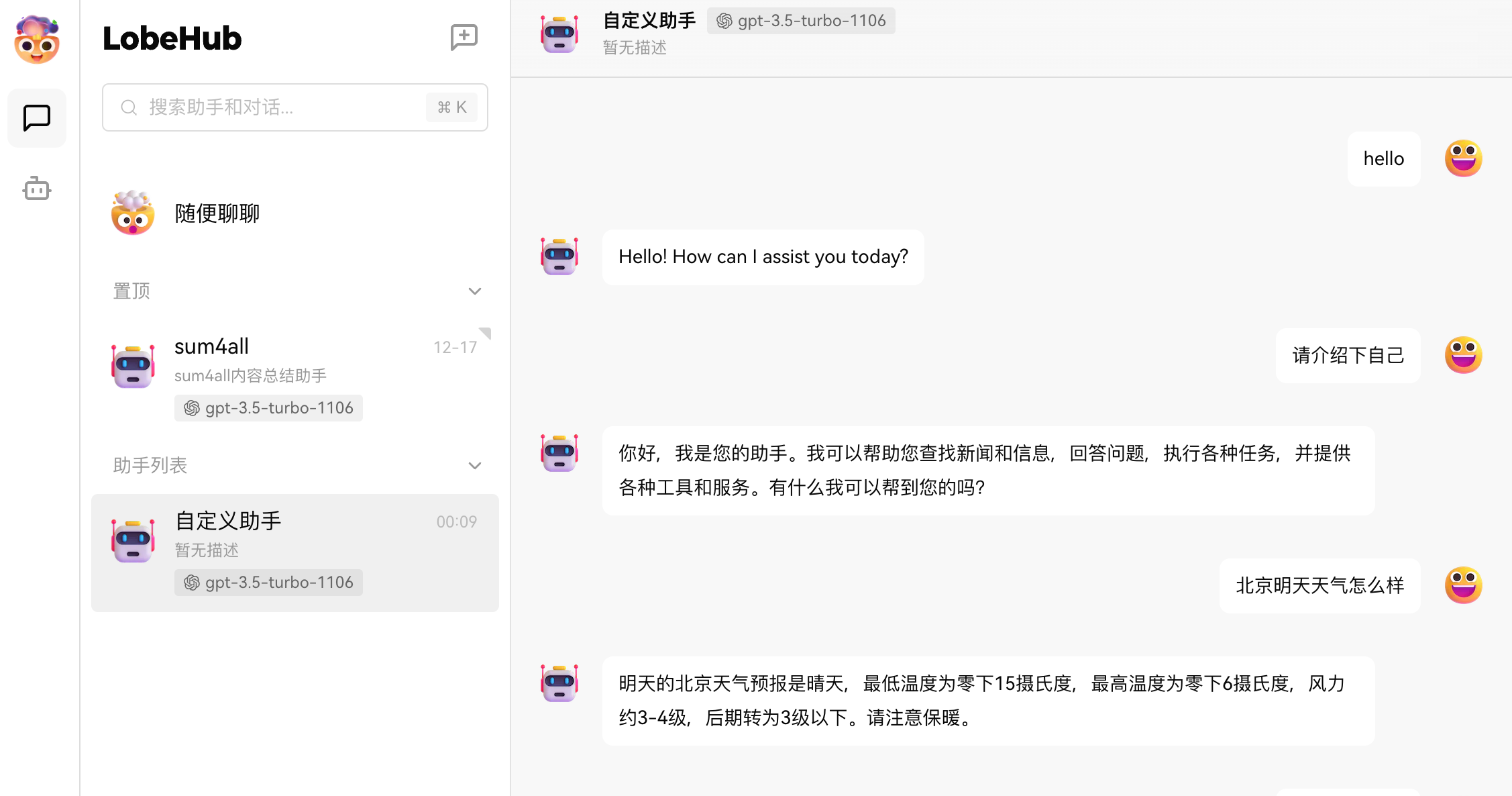

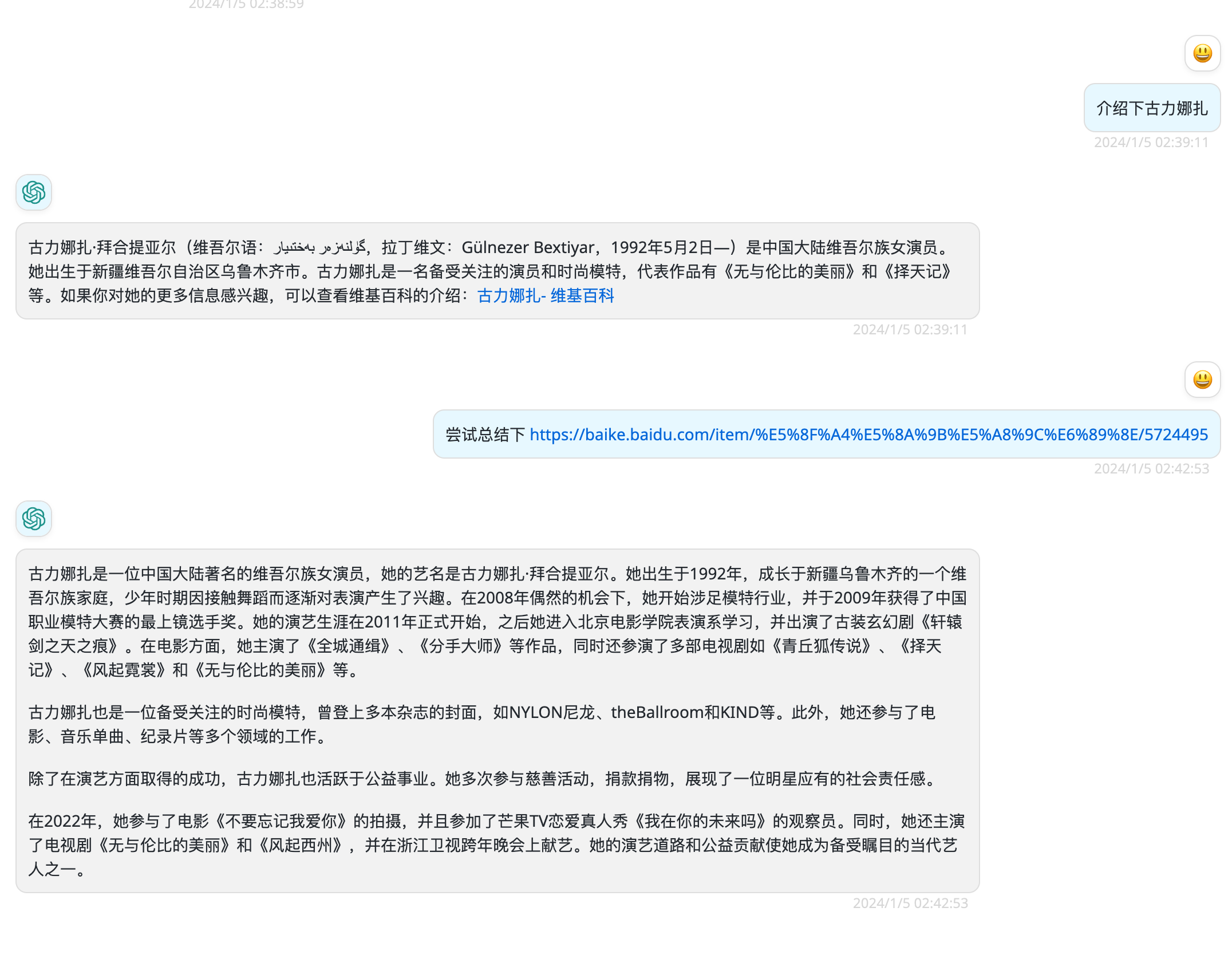

Give your LLM API internet access: web search, news, and web page summarization. It currently supports OpenAI, Gemini, and Moonshot (non-streaming). The model decides from your input whether it needs to go online, so it does not search on every request. No plugins or key changes are needed: just replace the custom API address in your usual third-party client. Self-hosting is also supported, and the other features you already use, such as image generation and voice, are not affected.


| Model | Features | Streaming output | Deployment methods |
|---|---|---|---|
| OpenAI | Internet access, news, content crawling | Streaming, non-streaming | Zeabur, local deployment, Cloudflare Worker, Vercel |
| Azure OpenAI | Internet access, news, content crawling | Streaming, non-streaming | Cloudflare Worker |
| Groq | Internet access, news, content crawling | Streaming, non-streaming | Cloudflare Worker |
| Gemini | Internet access | Streaming, non-streaming | Cloudflare Worker |
| Moonshot | Internet access, news, content crawling | Partial streaming, non-streaming | Zeabur, local deployment, Cloudflare Worker (streaming), Vercel |

In your client, replace the custom API address with the address of your deployment.
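
Depending on the client, this setting may expect either a base address or the full chat-completions URL. As a minimal sketch, the hypothetical `chat_endpoint` helper below (not part of search2ai) derives the full URL from a deployment address, assuming your deployment serves the same `/v1/chat/completions` path as the local server; `my-search2ai.example.com` is a placeholder domain.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of search2ai): turn a deployment base
# address into the full OpenAI-style chat-completions endpoint.
# Assumes the deployment exposes the same /v1/chat/completions path as
# the local server (http://localhost:3014/v1/chat/completions).
chat_endpoint() {
  local base="${1%/}"   # drop one trailing slash, if present
  base="${base%/v1}"    # tolerate addresses that already end in /v1
  printf '%s/v1/chat/completions\n' "$base"
}
```

For example, `chat_endpoint https://my-search2ai.example.com/` prints `https://my-search2ai.example.com/v1/chat/completions`.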


Zeabur One-click deployment

Click the button to deploy in one click, then modify the environment variables.

If you want to keep your project up to date, it is recommended to fork this repository first and then deploy your fork through Zeabur.

Local deployment

```shell
git clone https://github.com/fatwang2/search2ai
cd search2ai/api
nohup node index.js > output.log 2>&1 &   # run in the background, logging to output.log
tail -f output.log
```

The local API endpoint is then `http://localhost:3014/v1/chat/completions`.
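
A quick way to confirm that the local instance works is to send it an OpenAI-format request with curl. A minimal sketch, assuming the server is running on port 3014 as shown above and reads the key from a standard `Authorization: Bearer` header (the README does not spell this out); `build_payload`, `ask_local`, the `API_KEY` variable and the model name are hypothetical placeholders, not part of search2ai. Use your OpenAI key, or one of your `AUTH_KEYS` codes if you configured them.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build a minimal non-streaming chat request body.
# The prompt is inserted verbatim (no JSON escaping), so it must not
# contain double quotes or backslashes.
build_payload() {
  local model="$1" prompt="$2"
  printf '{"model":"%s","stream":false,"messages":[{"role":"user","content":"%s"}]}\n' \
    "$model" "$prompt"
}

# Hypothetical helper: send the request to the local search2ai instance.
# Assumes the server listens on port 3014 and accepts the key in a
# standard "Authorization: Bearer ..." header.
ask_local() {
  curl -sS http://localhost:3014/v1/chat/completions \
    -H "Content-Type: application/json" \
    -H "Authorization: Bearer ${API_KEY:?set API_KEY first}" \
    -d "$(build_payload "$1" "$2")"
}
```

For example, `API_KEY=sk-xxx ask_local gpt-3.5-turbo "What is in the news today"` sends a question that the model may decide to answer with a web search, while a question that needs no fresh information may be answered without one.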

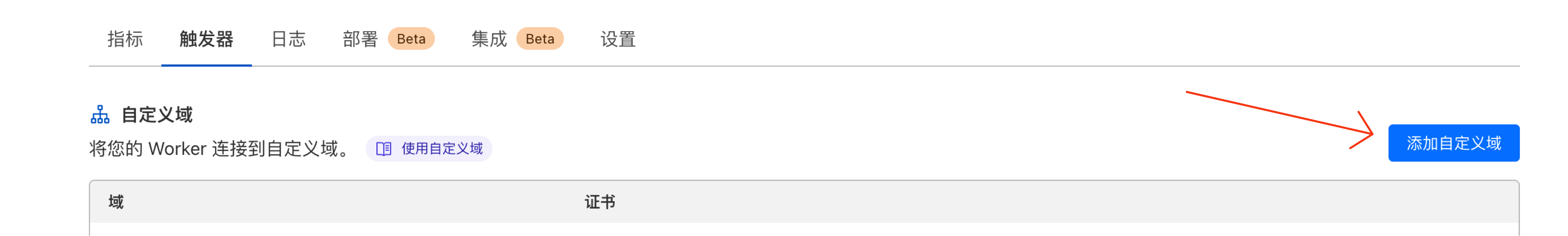

Cloudflare Worker Deployment

Vercel deployment

Special note: the Vercel deployment does not support streaming output for now and has a 10-second response limit, so the actual user experience is poor. I am mainly hoping an expert will submit a pull request to fix this.

One-click deployment

To keep receiving updates, you can also fork this project first and then deploy your fork on Vercel yourself.

This project provides some additional configuration items, set through environment variables:

| Environment variable | Required | Description | Example |
|---|---|---|---|
| SEARCH_SERVICE | Yes | The search service to use; configure the key that the chosen service requires | search1api, google, bing, serpapi, serper, duckduckgo, searxng |
| APIBASE | No | API base address, e.g. a third-party proxy | https://api.openai.com, https://api.moonshot.cn, https://api.groq.com/openai |
| MAX_RESULTS | Yes | Number of search results | 10 |
| CRAWL_RESULTS | No | Number of results to crawl in depth (fetch the page body after searching). Currently supported only with search1api; deep crawling is slow | 1 |
| SEARCH1API_KEY | No | Required if you choose search1api, a search service I built myself that is fast and inexpensive. Apply at https://search1api.com | xxx |
| BING_KEY | No | Required if you choose Bing search; please look up a tutorial yourself. Apply at https://search2ai.online/bing | xxx |
| GOOGLE_CX | No | Required if you choose Google search; please look up a tutorial yourself. Apply at https://search2ai.online/googlecx | xxx |
| GOOGLE_KEY | No | Required if you choose Google search (API key). Apply at https://search2ai.online/googlekey | xxx |
| SERPAPI_KEY | No | Required if you choose serpapi; the free tier allows 100 searches per month. Register at https://search2ai.online/serpapi | xxx |
| SERPER_KEY | No | Required if you choose serper; the free tier allows 2,500 searches over 6 months. Register at https://search2ai.online/serper | xxx |
| SEARXNG_BASE_URL | No | Required if you choose searxng: the domain of your self-hosted SearXNG service, with JSON output enabled. Tutorial: https://github.com/searxng/searxng | https://search.xxx.xxx |
| OPENAI_TYPE | No | OpenAI provider; defaults to openai | openai, azure |
| RESOURCE_NAME | No | Required if you use Azure | xxxx |
| DEPLOY_NAME | No | Required if you use Azure | gpt-35-turbo |
| API_VERSION | No | Required if you use Azure | 2024-02-15-preview |
| AZURE_API_KEY | No | Required if you use Azure | xxxx |
| AUTH_KEYS | No | Fill this in if you want users to send their own authorization code as the key in requests. Required if you use Azure | 000,1111,2222 |
| OPENAI_API_KEY | No | Fill this in if you want users to send an authorization code as the key when requesting OpenAI | sk-xxx |
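
The table implies which variables go together. As a minimal sketch, the hypothetical `check_search2ai_env` function below (not part of search2ai) checks them before you start the server, assuming each `SEARCH_SERVICE` value maps to the key rows listed above, that DuckDuckGo needs no key (none is listed for it), and that using `AUTH_KEYS` with OpenAI requires `OPENAI_API_KEY`. `azure_chat_url` shows the standard Azure OpenAI chat-completions URL that `RESOURCE_NAME`, `DEPLOY_NAME` and `API_VERSION` correspond to; the table does not say how search2ai builds this URL internally.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check (not part of search2ai).
# Prints each missing variable to stderr; returns 1 if any are missing,
# 2 for an unknown SEARCH_SERVICE, 0 otherwise.
check_search2ai_env() {
  local missing=() var
  local required=(SEARCH_SERVICE MAX_RESULTS)

  # Key(s) each search service needs, per the table above.
  case "${SEARCH_SERVICE:-}" in
    search1api) required+=(SEARCH1API_KEY) ;;
    google)     required+=(GOOGLE_CX GOOGLE_KEY) ;;
    bing)       required+=(BING_KEY) ;;
    serpapi)    required+=(SERPAPI_KEY) ;;
    serper)     required+=(SERPER_KEY) ;;
    searxng)    required+=(SEARXNG_BASE_URL) ;;
    duckduckgo|"") ;;  # no key listed for duckduckgo; empty is caught below
    *) echo "unknown SEARCH_SERVICE: $SEARCH_SERVICE" >&2; return 2 ;;
  esac

  if [ "${OPENAI_TYPE:-openai}" = "azure" ]; then
    # Azure settings plus AUTH_KEYS, which the table marks as required for Azure.
    required+=(RESOURCE_NAME DEPLOY_NAME API_VERSION AZURE_API_KEY AUTH_KEYS)
  elif [ -n "${AUTH_KEYS:-}" ]; then
    # Assumption: with OpenAI, user authorization codes need a server-side key.
    required+=(OPENAI_API_KEY)
  fi

  # ${!var} is bash indirect expansion: the value of the variable named by $var.
  for var in "${required[@]}"; do
    [ -n "${!var:-}" ] || missing+=("$var")
  done

  if [ "${#missing[@]}" -gt 0 ]; then
    printf 'missing: %s\n' "${missing[@]}" >&2
    return 1
  fi
}

# Standard Azure OpenAI chat-completions URL built from the Azure variables.
azure_chat_url() {
  printf 'https://%s.openai.azure.com/openai/deployments/%s/chat/completions?api-version=%s\n' \
    "$RESOURCE_NAME" "$DEPLOY_NAME" "$API_VERSION"
}
```

After exporting your variables, `check_search2ai_env && node index.js` starts the server only when the check passes.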