CLIP-ImageSearch-NCNN

Idea: I think this project is well suited to serving as a phone's built-in photo album search. Features can be quietly extracted from every picture in the background, so that when the user actually searches, results come back in seconds. It also gives the matching probability of each image, so the results can be returned as an image stream sorted from highest to lowest probability.
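The ranked image stream described above can be sketched as follows. This is a minimal, hypothetical C++ illustration, not the repo's code. It assumes the cosine similarity between the query text and every photo has already been computed from the cached features, and that the per-image probabilities come from a softmax over the whole album using roughly CLIP's learned logit scale of 100. `Hit` and `rank_by_probability` are illustrative names.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <string>
#include <vector>

// One result in the image stream: a photo path and its matching probability.
struct Hit {
    std::string path;
    float prob;
};

// Converts per-photo cosine similarities into probabilities with a softmax
// over the whole album, then sorts the photos from high to low probability.
// ASSUMPTION: logit_scale = 100 mimics CLIP's learned temperature; the
// repo's own probability formula may differ.
std::vector<Hit> rank_by_probability(const std::vector<std::string>& paths,
                                     const std::vector<float>& similarities,
                                     float logit_scale = 100.0f) {
    assert(paths.size() == similarities.size());
    std::vector<Hit> hits;
    if (paths.empty()) return hits;

    // Subtract the largest similarity before exp() to avoid overflow.
    const float max_sim = *std::max_element(similarities.begin(), similarities.end());
    float sum = 0.0f;
    hits.reserve(paths.size());
    for (std::size_t i = 0; i < paths.size(); ++i) {
        const float e = std::exp(logit_scale * (similarities[i] - max_sim));
        hits.push_back({paths[i], e});
        sum += e;
    }
    for (Hit& h : hits) h.prob /= sum;  // normalize so probabilities sum to 1

    // Highest probability first, i.e. the order the image stream is shown in.
    std::stable_sort(hits.begin(), hits.end(),
                     [](const Hit& a, const Hit& b) { return a.prob > b.prob; });
    return hits;
}
```

Because the expensive feature extraction happens ahead of time, a query only needs one text encoding plus this cheap scoring and sorting pass over the cached similarities.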

Why do it? I wanted to do CLIP because I had worked on GPT before. At the time I thought: since I've done GPT, why not do CLIP too? (Shameless plug: GPT2-ChineseChat-NCNN)

What to build: Like GPT, CLIP can be used in many different ways. I picked from awesome-CLIP, and the natural-language-image-search project caught my eye at a glance: you give a sentence describing the picture you want, and it searches the gallery for pictures that match. As soon as I saw this project, I knew this feature was made for phone photo albums. So why not build it?

Goal: Use ncnn to deploy CLIP for natural-language image retrieval, with demos on x86 and Android.

PS: I'm busy with work, so updates are slow. All I ask for is a star.

Note: For easy downloading, all models and executables have been uploaded to GitHub. You can download them here: https://github.com/EdVince/model_zoo/releases/tag/CLIP-ImageSearch-NCNN

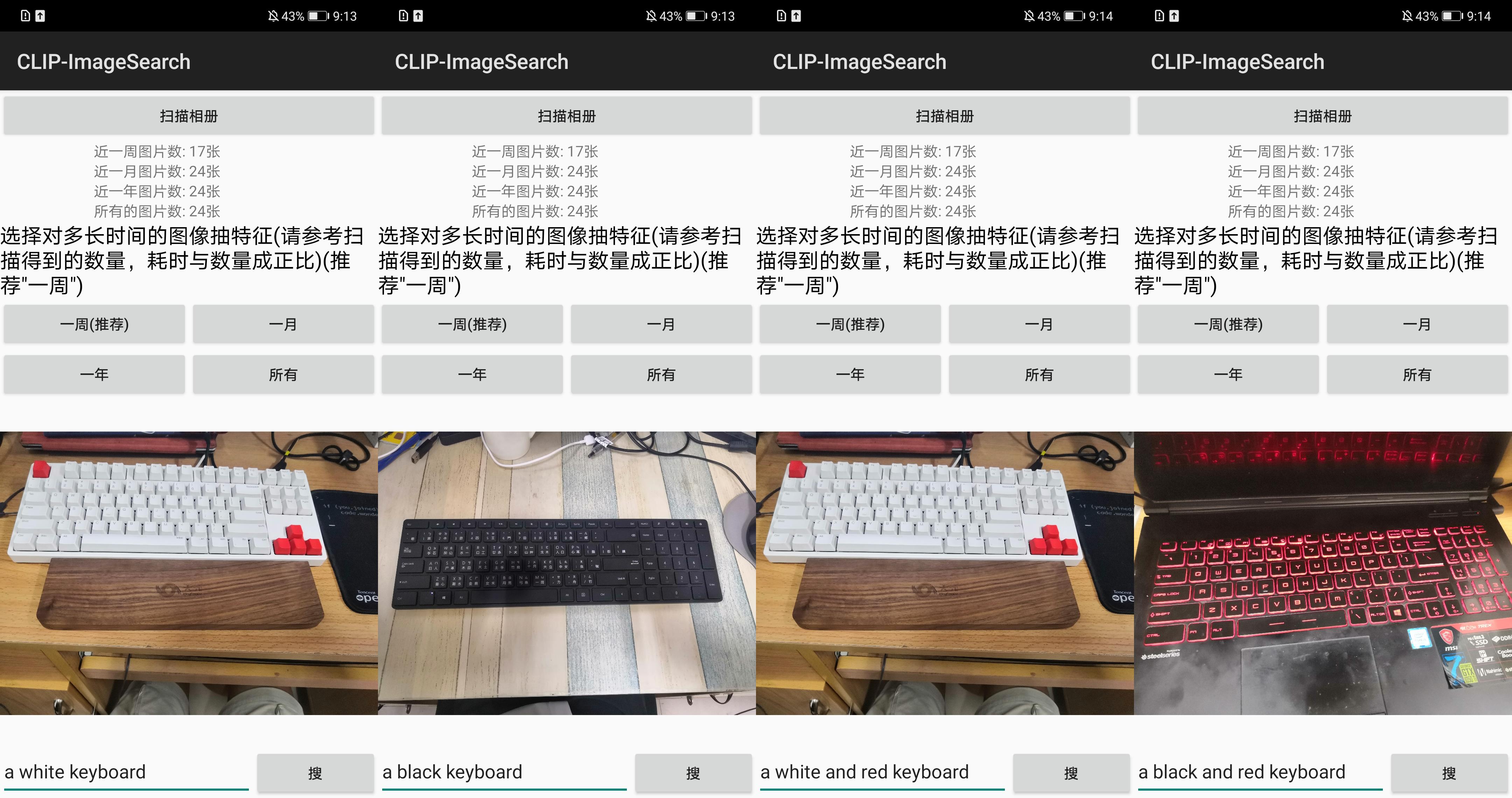

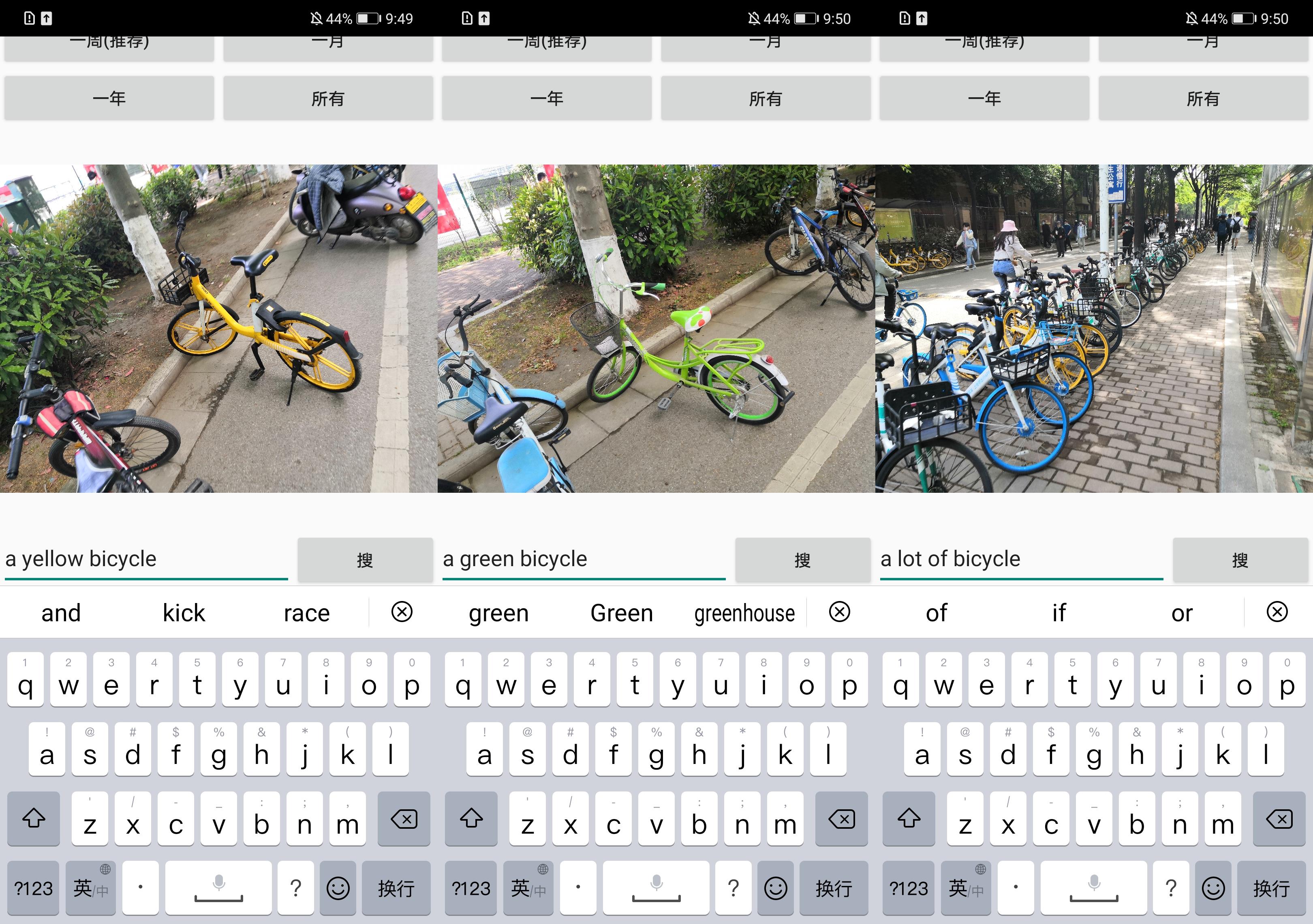

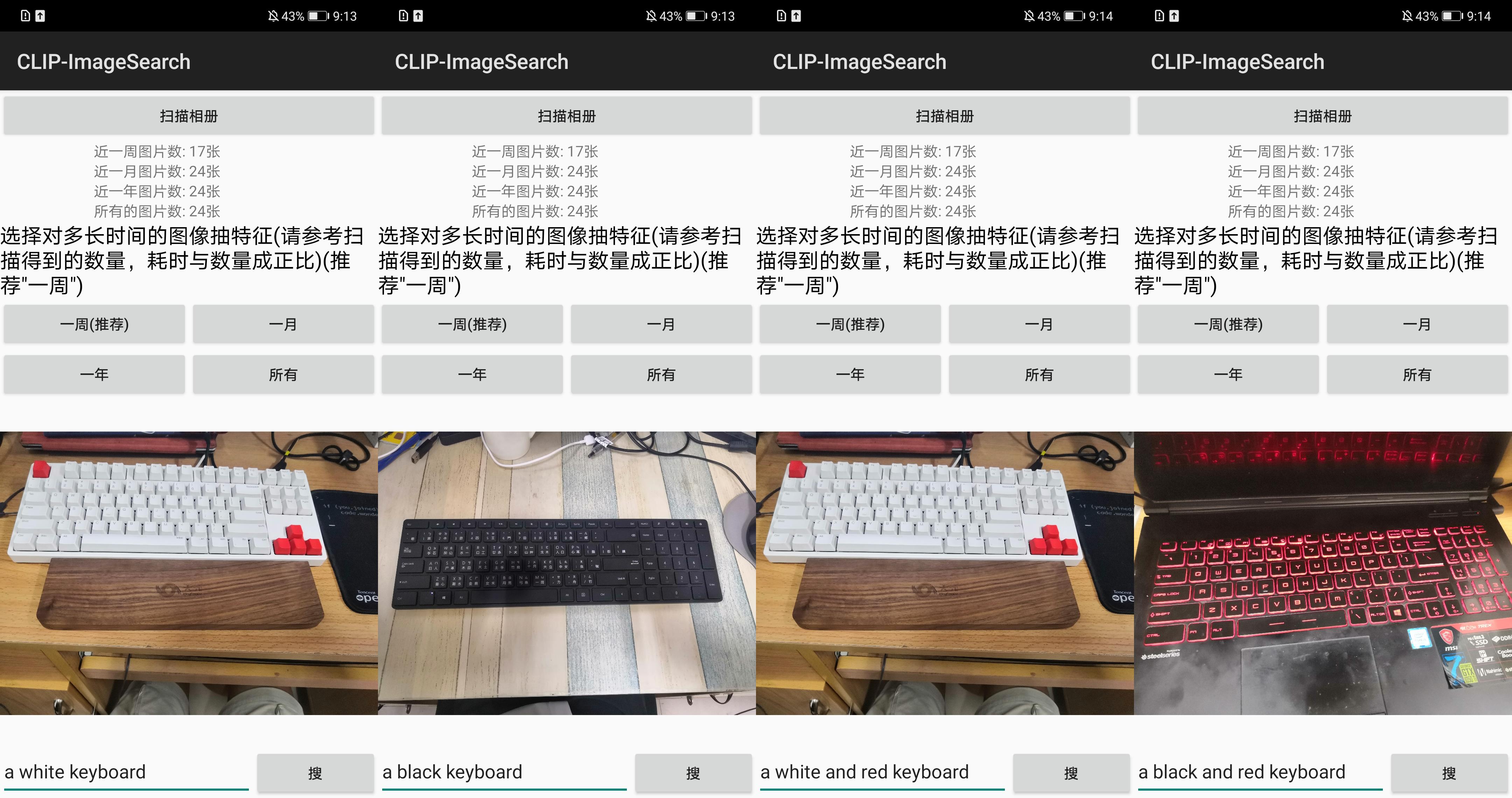

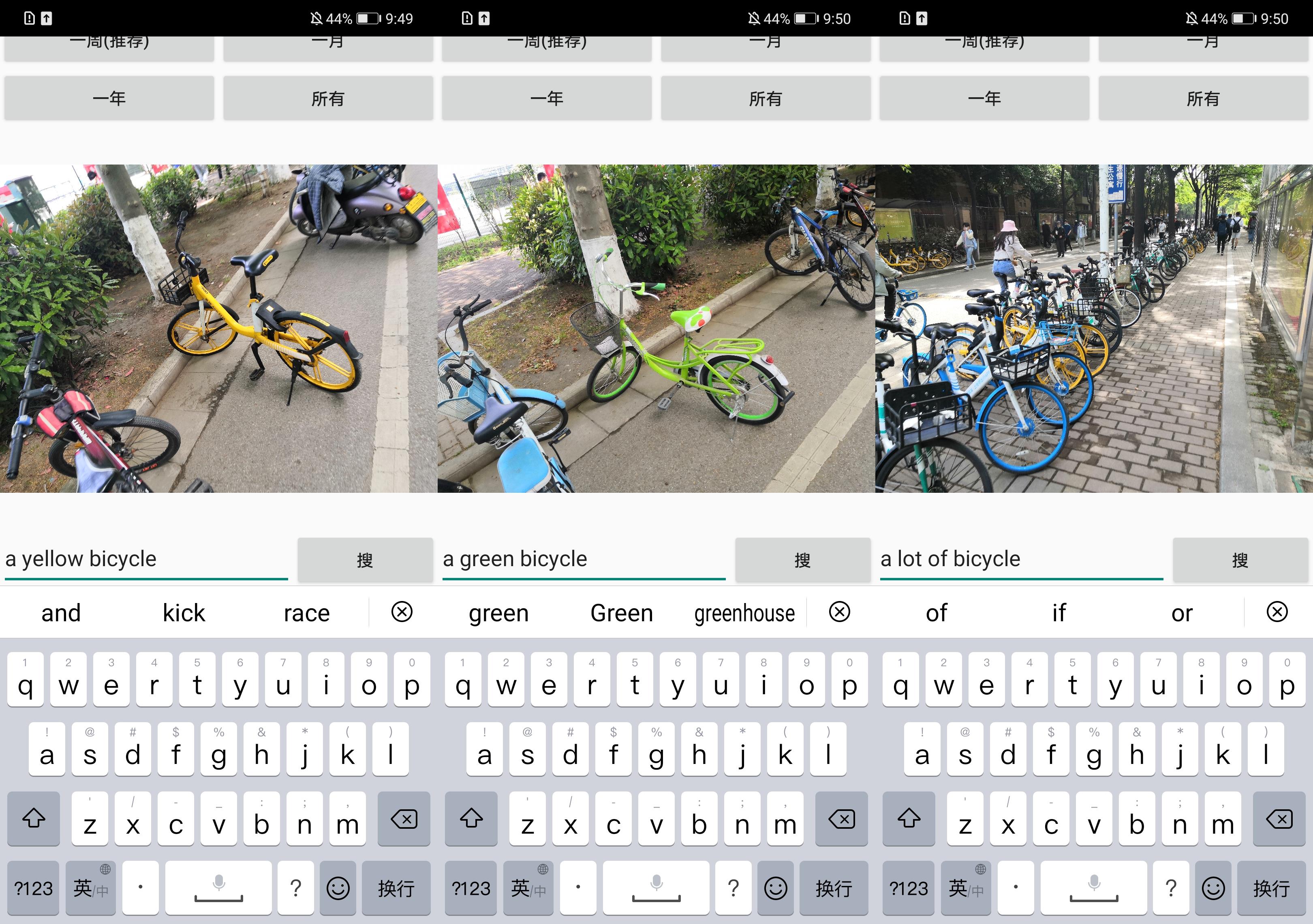

Demo

Android: a ready-to-run APK

Usage:

- First click "Scan the Album" to scan the pictures on your phone

- Depending on how many pictures were taken in each time period, choose a suitable feature-extraction option (the time is proportional to the number of pictures: about 0.5 s per picture on a Kirin 970, so be patient)

- Type what you want to search for in the box at the bottom. Be sure to use English!!!

- Finally, click "Search" to get the results (about 1.5 s on a Kirin 970)
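The timing figures in the steps above allow a rough back-of-the-envelope estimate. Assuming extraction time scales linearly at the quoted 0.5 s per picture on a Kirin 970 (real speed will vary with the device), scanning an album of $N$ pictures takes about:

$$
T_{\text{extract}} \approx N \times 0.5\,\text{s}, \qquad N = 1000 \;\Rightarrow\; T_{\text{extract}} \approx 500\,\text{s} \approx 8.3\,\text{min}
$$

After that one-off cost, each search only takes the quoted 1.5 s or so, which is why the features are worth extracting in advance.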

x86: a ready-to-run EXE

Usage: Just click the buttons in order, as follows:

- First click "1.Select gallery" and select the repo's gallery folder

- Then click "2. Extract the gallery features" to extract the features of every picture in the selected folder. The more pictures there are, the longer this takes.

- Next, type a sentence in the text box describing the picture you want. It must be in English; otherwise, describe anything you like.

- Finally, click "4.Search", and the program will automatically return the picture in the gallery that best matches the input text.

How it works

- Use CLIP's encode_image to extract image features and build a feature-vector library for the gallery

- Use CLIP's encode_text to extract text features and build a text feature vector

- Compute the similarity between the two sets of feature vectors. Matching works in both directions: text can be matched against images, and images against text.

- This gives a similarity score for every picture. Out of laziness, I only show the one with the highest matching probability.

- With a little modification, this can become a picture-search feature for your phone's photo album.

- It supports image-to-image, text-to-image, image-to-text, and even text-to-text search. There are many ways to use it, but they all come down to finding similar items with these features, so I won't go into details.

- The most time-consuming step is actually building the feature vectors for all pictures in the gallery. Here I use the "RN50" CLIP model, whose image encoder is ResNet-50.
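The matching step in the list above boils down to cosine similarity between feature vectors. The following is a minimal C++ sketch, assuming the outputs of encode_image and encode_text have already been copied into plain `std::vector<float>` buffers of equal length. `Feature`, `l2_normalize`, `cosine_similarity` and `best_match` are hypothetical helper names, not functions from ncnn or the repo.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical alias: one CLIP embedding (image or text) as a flat float vector.
using Feature = std::vector<float>;

// Scales a feature vector to unit length so a dot product equals cosine similarity.
Feature l2_normalize(Feature v) {
    float norm = 0.0f;
    for (float x : v) norm += x * x;
    norm = std::sqrt(norm);
    if (norm > 0.0f)
        for (float& x : v) x /= norm;
    return v;
}

// Cosine similarity between two feature vectors of the same length.
float cosine_similarity(const Feature& a, const Feature& b) {
    assert(a.size() == b.size());
    const Feature na = l2_normalize(a);
    const Feature nb = l2_normalize(b);
    float dot = 0.0f;
    for (std::size_t i = 0; i < na.size(); ++i) dot += na[i] * nb[i];
    return dot;
}

// Returns the index of the candidate most similar to the query, or -1 if
// there are no candidates. Since CLIP maps images and text into the same
// embedding space, one function covers every direction: text->image,
// image->text, image->image and text->text.
int best_match(const Feature& query, const std::vector<Feature>& candidates) {
    int best = -1;
    float best_sim = -2.0f;  // cosine similarity is never below -1
    for (std::size_t i = 0; i < candidates.size(); ++i) {
        const float s = cosine_similarity(query, candidates[i]);
        if (s > best_sim) {
            best_sim = s;
            best = static_cast<int>(i);
        }
    }
    return best;
}
```

For example, `best_match(text_feature, gallery_features)` picks the single best picture, like the demo does, while `best_match(image_feature, text_features)` goes the other way. In a real album search you would normalize each gallery feature once while building the library, rather than on every query as this sketch does.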

Repo structure

- android: source code of the provided APK

- x86: source code of the provided EXE, based on Qt

- gallery: A small gallery for testing

- resources: resource folder for the README

Work content

References

- ncnn

- CLIP

- natural-language-image-search