A mini-framework for evaluating LLM performance on the Bulls and Cows number guessing game, supporting multiple providers through LiteLLM.

Note

TLDR: Bulls and Cows is a code-breaking game for two players. One player writes down a 4-digit secret number whose digits are all different (e.g., 1234). The other player (an LLM in this case) then tries to guess it (e.g., 1246). After every guess, the number of matches is returned. Matching digits in the right position are "bulls" (two bulls in this example: 1 and 2); matching digits in a different position are "cows" (one cow: 4). Solving the game requires reasoning (to come up with a good next guess) and in-context memory (to learn from past answers). It has been proven that any 4-digit secret number can be solved within seven turns.
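To make the scoring rule concrete, here is a minimal sketch in Python. The function names `score` and `solve` are hypothetical and not taken from this repository, and the sketch assumes digits 0-9 with a leading zero allowed. The solver simply guesses the first candidate that is still consistent with all feedback so far; it illustrates the "reason and remember" loop and is not guaranteed to meet the seven-turn bound.

```python
from itertools import permutations


def score(secret: str, guess: str) -> tuple[int, int]:
    """Return (bulls, cows) for a guess against a secret with distinct digits."""
    bulls = sum(s == g for s, g in zip(secret, guess))
    # Digits present in both numbers, regardless of position.
    common = len(set(secret) & set(guess))
    return bulls, common - bulls


def solve(secret: str, length: int = 4) -> list[str]:
    """Repeatedly guess the first candidate consistent with all feedback so far."""
    # Assumption: leading zeros are allowed, giving 5040 candidates for length 4.
    candidates = ["".join(p) for p in permutations("0123456789", length)]
    guesses = []
    while True:
        guess = candidates[0]
        guesses.append(guess)
        feedback = score(secret, guess)
        if feedback[0] == length:
            return guesses
        # Keep only the secrets that would have produced the same feedback.
        candidates = [c for c in candidates if score(c, guess) == feedback]


print(score("1234", "1246"))  # (2, 1)
print(solve("1234")[-1])      # 1234
```

Each wrong guess removes at least itself from the candidate list, so the loop always terminates with the secret as the last guess.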

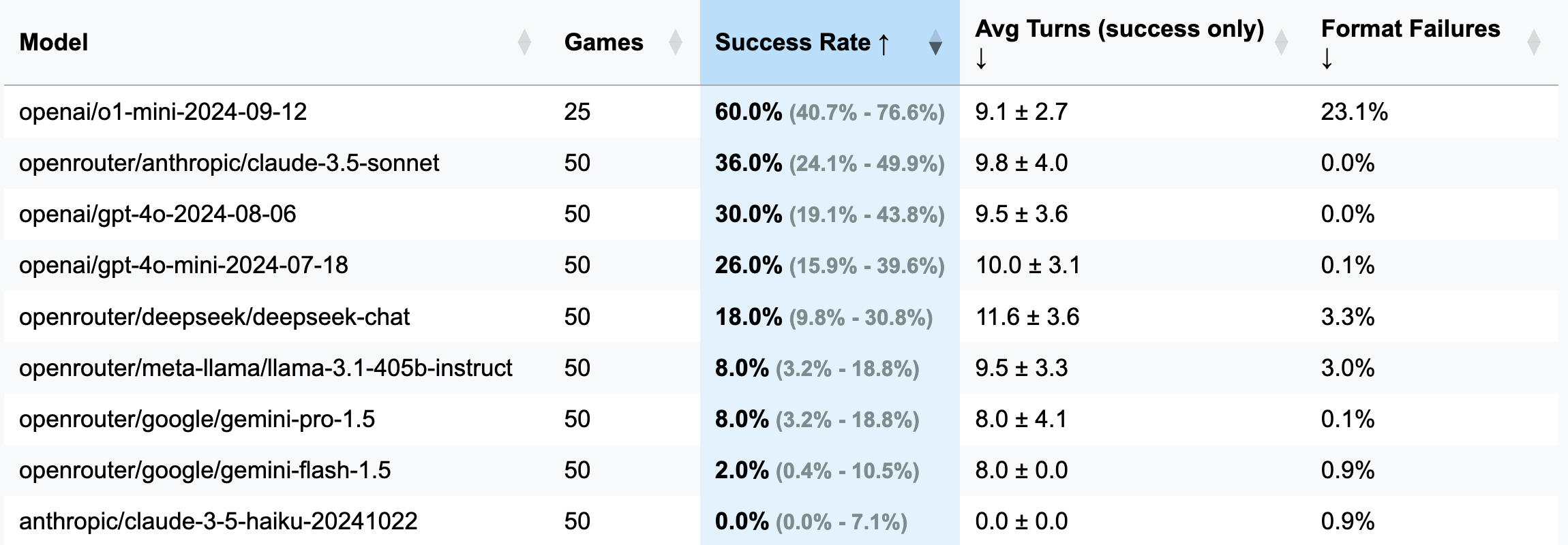

| Model | Games | Success Rate | Avg Turns (success only) | Format Failures (Turns) |
|---|---|---|---|---|
| openai/o1-mini-2024-09-12 | 25 | 60.0% [40.7%; 76.6%] | 9.1±2.7 | 23.1% |
| openrouter/anthropic/claude-3.5-sonnet | 50 | 36.0% [24.1%; 49.9%] | 9.8±4.0 | 0.0% |
| openai/gpt-4o-2024-08-06 | 50 | 30.0% [19.1%; 43.8%] | 9.5±3.6 | 0.0% |
| openai/gpt-4o-mini-2024-07-18 | 50 | 26.0% [15.9%; 39.6%] | 10.0±3.1 | 0.1% |
| openrouter/deepseek/deepseek-chat | 50 | 18.0% [9.8%; 30.8%] | 11.6±3.6 | 3.3% |
| openrouter/meta-llama/llama-3.1-405b-instruct | 50 | 8.0% [3.2%; 18.8%] | 9.5±3.3 | 3.0% |
| openrouter/google/gemini-pro-1.5 | 50 | 8.0% [3.2%; 18.8%] | 8.0±4.1 | 0.1% |
| openrouter/google/gemini-flash-1.5 | 50 | 2.0% [0.4%; 10.5%] | 8.0±0.0 | 0.9% |
| anthropic/claude-3-5-haiku-20241022 | 50 | 0.0% [0.0%; 7.1%] | 0.0±0.0 | 0.9% |

Important

Most runs consisted of 50 games (o1-mini: 25), so the confidence intervals are wide. If you'd like to spend $100-200 in API credits on tests to get more accurate results and narrower CIs, please feel free to reach out to me or open a PR with your results.
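For a sense of how the interval width depends on the number of games, here is a small sketch. The bracketed intervals in the table are numerically consistent with a 95% Wilson score interval; that is an inference from the numbers, not something this README states, and `wilson_interval` is a hypothetical helper name.

```python
import math


def wilson_interval(successes: int, games: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (z=1.96 is roughly 95%)."""
    if games <= 0:
        raise ValueError("games must be positive")
    p = successes / games
    denom = 1 + z**2 / games
    center = (p + z**2 / (2 * games)) / denom
    half = z * math.sqrt(p * (1 - p) / games + z**2 / (4 * games**2)) / denom
    return max(0.0, center - half), min(1.0, center + half)


low, high = wilson_interval(18, 50)  # 36.0% success over 50 games
print(f"{low:.1%} .. {high:.1%}")     # 24.1% .. 49.9%

# Same success rate over four times as many games: a noticeably narrower interval.
low, high = wilson_interval(72, 200)
print(f"{low:.1%} .. {high:.1%}")
```

The first call reproduces the interval shown for a 36.0% success rate over 50 games.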

- The required answer format is `GUESS: 1234` (defined in the prompts file).
- [...] (`.strip()` was added to address this).
- o1-mini often forgets the formatting rules and tries to add bold emphasis to the response. This behavior was deemed unacceptable and counted as an error and a wasted turn, as the instruction explicitly specifies the required formatting.
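A strict format check along these lines could look like the sketch below. It is not the repository's parser: `parse_guess` is a hypothetical name, and the sketch assumes that only the last line of the reply is inspected and that it must be exactly `GUESS: ` followed by the digits, after stripping surrounding whitespace.

```python
import re
from typing import Optional

# Assumed strict format: "GUESS: " followed only by ASCII digits.
GUESS_RE = re.compile(r"GUESS: ([0-9]+)")


def parse_guess(response: str, length: int = 4) -> Optional[str]:
    """Return the guessed digits, or None if the reply breaks the format."""
    lines = response.strip().splitlines()
    if not lines:
        return None
    # Only the final line is checked; surrounding whitespace is ignored.
    match = GUESS_RE.fullmatch(lines[-1].strip())
    if match is None:
        return None
    digits = match.group(1)
    # Enforce the game rule: correct length and all digits different.
    if len(digits) != length or len(set(digits)) != length:
        return None
    return digits


print(parse_guess("GUESS: 1234\n"))     # 1234
print(parse_guess("**GUESS: 1234**"))   # None (bold emphasis breaks the format)
```

Under these assumptions, a bolded answer is rejected and would count as a format failure, while trailing whitespace is tolerated.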

[...] o1-mini's behaviour).

3 Digits (Debug Version: fewer turns, shorter reasoning):

- openai/gpt-4o-mini-2024-07-18: 283k cached + 221k uncached + 68k output = $0.1 (recommended for debug)
- openai/gpt-4o-2024-08-06: 174k cached + 241k uncached + 56k output = $1.38
- openai/gpt-4-turbo-2024-04-09: UNKNOWN = $6.65
- openai/o1-mini-2024-09-12: 0k cached + 335k uncached + 1345k output = $17.15
- anthropic/claude-3-haiku-20240307: 492k input + 46k output = $0.18

4 Digits (Main Version):

- openai/gpt-4o-mini-2024-07-18: 451k cached + 429k uncached + 100k output = $0.15
- openai/gpt-4o-2024-08-06: 553k cached + 287k uncached + 87k output = $2.29
- (25 games) openai/o1-mini-2024-09-12: 0k cached + 584k uncached + 1815k output = $23.54
- anthropic/claude-3-5-haiku-20241022: 969k input + 90k output = $1.42
- openrouter/anthropic/claude-3.5-sonnet (new): UNKNOWN = $5.2
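The totals above are token counts multiplied by per-token prices. Here is a minimal sketch of that arithmetic; the `Prices` class and `run_cost` function are hypothetical, and the per-million-token prices in the example are assumptions that are not stated in this README, so check your provider's current pricing before relying on them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Prices:
    """USD per one million tokens; values are supplied by the caller."""
    cached_input: float
    uncached_input: float
    output: float


def run_cost(cached: int, uncached: int, output: int, prices: Prices) -> float:
    """Total cost in USD for the given token counts."""
    tokens_per_million = 1_000_000
    total = (
        cached * prices.cached_input
        + uncached * prices.uncached_input
        + output * prices.output
    )
    return total / tokens_per_million


# Assumed o1-mini prices (not from this README): $3 input, $12 output per 1M tokens.
o1_mini = Prices(cached_input=1.5, uncached_input=3.0, output=12.0)
print(round(run_cost(0, 584_000, 1_815_000, o1_mini), 2))  # 23.53
```

With these assumed prices, the result lands within a cent of the listed $23.54; the small gap is expected because the token counts in the list are rounded to thousands.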

This framework came into existence thanks to a curious comment from a subscriber of my Telegram channel. They claimed to have tested various LLMs in a game of Bulls and Cows, concluding that none could solve it and, therefore, LLMs can’t reason. Intrigued, I asked for examples of these so-called "failures," only to be told the chats were deleted. Convenient. Later, they mentioned trying o1-preview, which apparently did solve it—in about 20 moves, far from the 7 moves considered optimal.

Meanwhile, I had been looking for an excuse to experiment with OpenHands, and what better way than to challenge Copilot to spin up an LLM benchmark from scratch? After three evenings of half-hearted effort (I was playing STALKER 2 simultaneously), this benchmark was born—a product of equal parts apathy and the desire to prove a point no one asked for. Enjoy!

    pip install -r requirements.txt
    pre-commit install

(Optional) To understand the logic, read all the prompts here.

Configure your LLM provider's API keys as environment variables (either directly in your terminal or using a `.env` file). I recommend using either OpenAI or Anthropic keys, and OpenRouter for anything else.

Adjust `config/default_config.yaml` with your desired model and game settings. Use `run_id` to store different runs in separate folders; otherwise, result folders will be named with timestamps.

The main fields are `model`, `target_length` (how many digits are in the secret number), and `num_concurrent_games` (to get around laughable TPS API limits; e.g., for Anthropic Tier 2 I do not recommend setting this value above 2, while OpenAI can easily support 8-10 concurrent games).
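To illustrate what a concurrency cap like `num_concurrent_games` does, here is a minimal sketch using `asyncio.Semaphore`. It is not the benchmark's actual implementation: `play_game` and `run_games` are hypothetical stand-ins, and the sleep only simulates API latency.

```python
import asyncio
import random


async def play_game(game_id: int) -> int:
    """Hypothetical stand-in for one full game; returns the game id."""
    await asyncio.sleep(random.uniform(0.01, 0.03))  # simulated API latency
    return game_id


async def run_games(num_games: int, num_concurrent_games: int) -> list[int]:
    """Run all games with at most num_concurrent_games in flight at once."""
    semaphore = asyncio.Semaphore(num_concurrent_games)

    async def limited(game_id: int) -> int:
        # Each game waits here until one of the slots is free.
        async with semaphore:
            return await play_game(game_id)

    # gather() returns results in the order the tasks were passed in.
    return await asyncio.gather(*(limited(i) for i in range(num_games)))


results = asyncio.run(run_games(num_games=10, num_concurrent_games=2))
print(results)  # [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
```

A lower cap trades wall-clock time for fewer rate-limit errors, which is the trade-off behind the per-provider recommendations above.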

Run the benchmark and visualize results of all runs:

    python run_benchmark.py
    python scripts/visualize_results.py

Results will be available in HTML (with additional plots) and Markdown.

The benchmark evaluates LLMs on three key aspects:

Results are saved with full game histories (including conversation logs, e.g., here) and configurations for detailed analysis.

The project uses Black (line length: 100) and isort for code formatting. Pre-commit hooks ensure code quality by checking:

Run manual checks with:

    pre-commit run --all-files

Run tests (yes, there are tests for game logic, answer parsing, and validation):

    python -m pytest . -v