chat_gpt_sdk

3.1.3

ChatGPT is a chatbot launched by OpenAI in November 2022. It is built on top of OpenAI's GPT-3.5 family of large language models.

A "community-maintained" library.

chat_gpt_sdk: 3.1.3

final openAI = OpenAI.instance.build(
  token: token,
  baseOption: HttpSetup(receiveTimeout: const Duration(seconds: 5)),
  enableLog: true,
);

openAI.setToken('new-access-token');

///get token
openAI.token;

Text Complete API

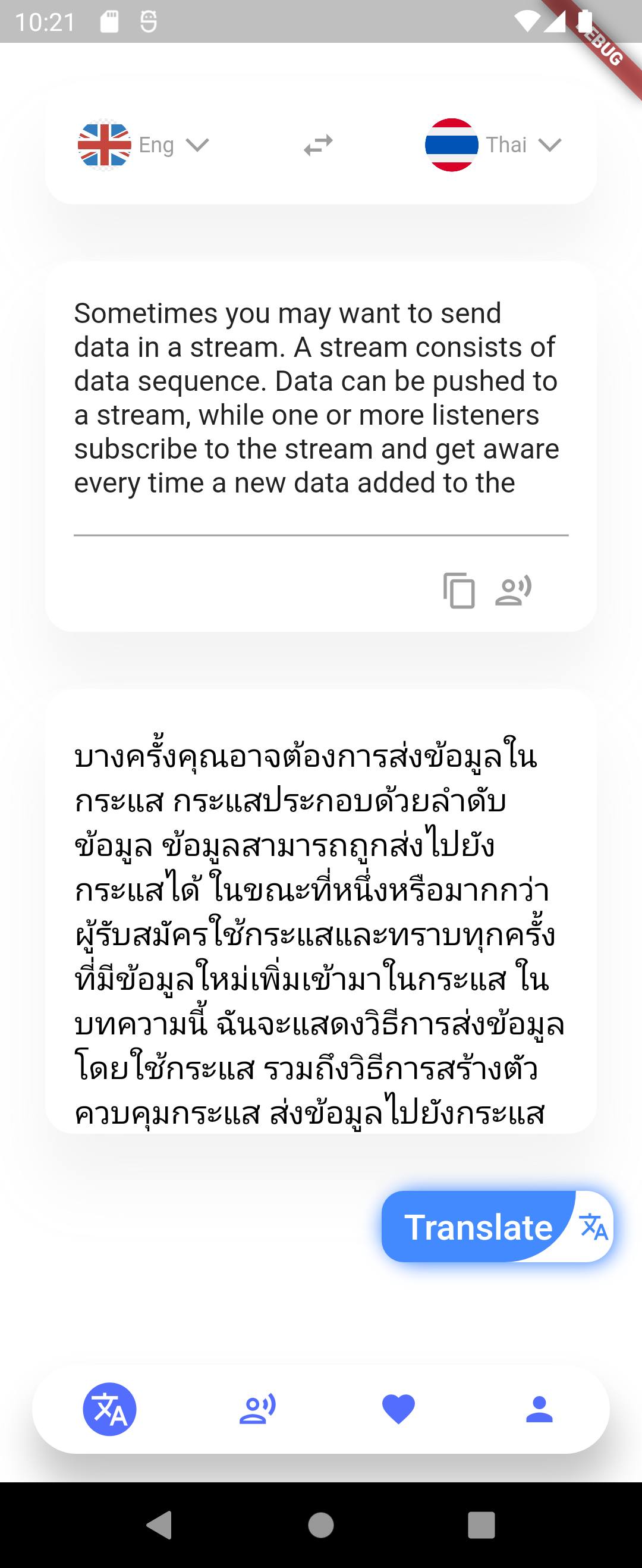

void _translateEngToThai() async {
  final request = CompleteText(
      prompt: translateEngToThai(word: _txtWord.text.toString()),
      maxToken: 200,
      model: TextDavinci3Model());

  final response = await openAI.onCompletion(request: request);

  ///cancel request
  openAI.cancelAIGenerate();
  print(response);

}

Future<CTResponse?>? _translateFuture;

_translateFuture = openAI.onCompletion(request: request);

///ui code
FutureBuilder<CTResponse?>(
  future: _translateFuture,
  builder: (context, snapshot) {
    final data = snapshot.data;
    // `something` is a placeholder for the widget to show in each state.
    if (snapshot.connectionState == ConnectionState.done) return something;
    if (snapshot.connectionState == ConnectionState.waiting) return something;
    return something;

}) void completeWithSSE () {

final request = CompleteText (

prompt : "Hello world" , maxTokens : 200 , model : TextDavinci3Model ());

openAI. onCompletionSSE (request : request). listen ((it) {

debugPrint (it.choices.last.text);

});

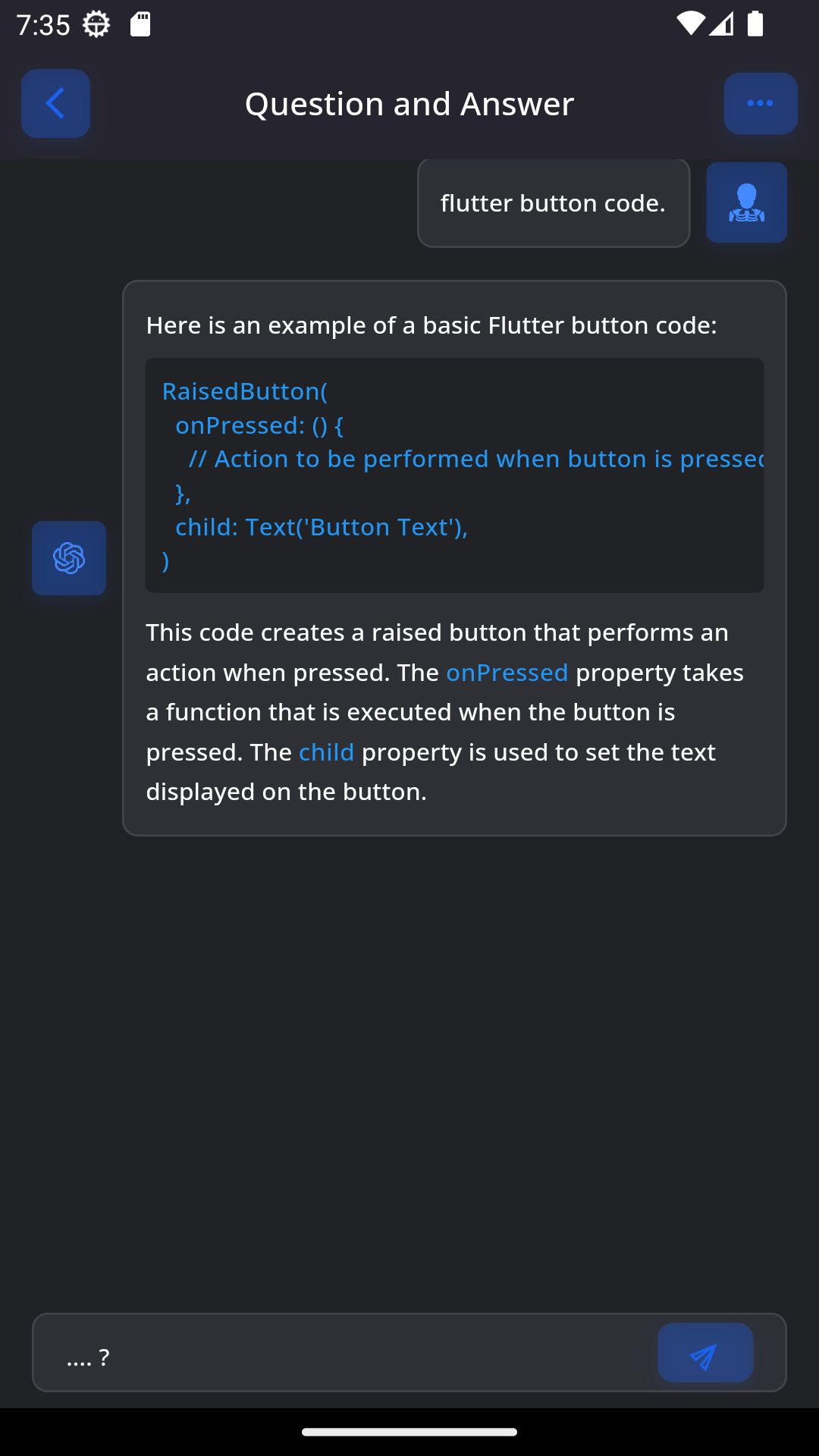

} void chatComplete () async {

  final request = ChatCompleteText(messages: [
    Map.of({"role": "user", "content": 'Hello!'})
  ], maxToken: 200, model: Gpt4ChatModel());

  final response = await openAI.onChatCompletion(request: request);
  for (var element in response!.choices) {
    print("data -> ${element.message?.content}");
  }
}

void chatCompleteWithSSE() {
  final request = ChatCompleteText(messages: [
    Map.of({"role": "user", "content": 'Hello!'})
  ], maxToken: 200, model: Gpt4ChatModel());

  openAI.onChatCompletionSSE(request: request).listen((it) {
    debugPrint(it.choices.last.message?.content);
  });

}

void chatCompleteWithSSE() {
  final request = ChatCompleteText(messages: [
    Map.of({"role": "user", "content": 'Hello!'})
  ], maxToken: 200, model: GptTurboChatModel());

  openAI.onChatCompletionSSE(request: request).listen((it) {
    debugPrint(it.choices.last.message?.content);
  });
}

void chatComplete() async {
  final request = ChatCompleteText(messages: [
    Map.of({"role": "user", "content": 'Hello!'})
  ], maxToken: 200, model: Gpt41106PreviewChatModel());

  final response = await openAI.onChatCompletion(request: request);
  for (var element in response!.choices) {
    print("data -> ${element.message?.content}");
  }

}

void gptFunctionCalling() async {
  final request = ChatCompleteText(
    messages: [
      Messages(
          role: Role.user,
          content: "What is the weather like in Boston?",
          name: "get_current_weather"),
    ],
    maxToken: 200,
    model: Gpt41106PreviewChatModel(),
    tools: [
      {
        "type": "function",
        "function": {
          "name": "get_current_weather",
          "description": "Get the current weather in a given location",
          "parameters": {
            "type": "object",
            "properties": {
              "location": {
                "type": "string",
                "description": "The city and state, e.g. San Francisco, CA"
              },
              "unit": {
                "type": "string",
                "enum": ["celsius", "fahrenheit"]
              }
            },
            "required": ["location"]
          }
        }
      }
    ],
    toolChoice: 'auto',
  );

  ChatCTResponse? response = await openAI.onChatCompletion(request: request);

}

void imageInput() async {
  final request = ChatCompleteText(
    messages: [
      {
        "role": "user",
        "content": [
          {"type": "text", "text": "What’s in this image?"},
          {
            "type": "image_url",
            "image_url": {"url": "image-url"}
          }
        ]
      }
    ],
    maxToken: 200,
    model: Gpt4VisionPreviewChatModel(),
  );

  ChatCTResponse? response = await openAI.onChatCompletion(request: request);
  debugPrint("$response");

}

void createAssistant() async {
  final assistant = Assistant(
    model: Gpt4AModel(),
    name: 'Math Tutor',
    instructions:
        'You are a personal math tutor. When asked a question, write and run Python code to answer the question.',
    tools: [
      {
        "type": "code_interpreter",
      }
    ],
  );

  await openAI.assistant.create(assistant: assistant);
}

void createAssistantFile() async {
  await openAI.assistant.createFile(assistantId: '', fileId: '');

}

void listAssistant() async {
  final assistants = await openAI.assistant.list();
  assistants.map((e) => e.toJson()).forEach(print);
}

void listAssistantFile() async {
  final assistants = await openAI.assistant.listFile(assistantId: '');
  assistants.data.map((e) => e.toJson()).forEach(print);
}

void retrieveAssistant() async {
  final assistants = await openAI.assistant.retrieves(assistantId: '');
}

void retrieveAssistantFiles() async {
  final assistants =
      await openAI.assistant.retrievesFile(assistantId: '', fileId: '');

}

void modifyAssistant() async {
  final assistant = Assistant(
    model: Gpt4AModel(),
    instructions:
        'You are an HR bot, and you have access to files to answer employee questions about company policies. Always respond with info from either of the files.',
    tools: [
      {
        "type": "retrieval",
      }
    ],
    fileIds: [
      "file-abc123",
      "file-abc456",
    ],
  );

  await openAI.assistant.modifies(assistantId: '', assistant: assistant);
}

void deleteAssistant() async {
  await openAI.assistant.delete(assistantId: '');
}

void deleteAssistantFile() async {
  await openAI.assistant.deleteFile(assistantId: '', fileId: '');

}

openAI.assistant.v2;

///empty body
void createThreads() async {
  await openAI.threads.createThread(request: ThreadRequest());
}

///with message
void createThreads() async {
  final request = ThreadRequest(messages: [
    {
      "role": "user",
      "content": "Hello, what is AI?",
      "file_ids": ["file-abc123"]
    },
    {
      "role": "user",
      "content": "How does AI work? Explain it in simple terms."
    },
  ]);

  await openAI.threads.createThread(request: request);

}

void retrieveThread() async {
  final mThread = await openAI.threads.retrieveThread(threadId: 'threadId');
}

void modifyThread() async {
  await openAI.threads.modifyThread(threadId: 'threadId', metadata: {
    "metadata": {
      "modified": "true",
      "user": "abc123",
    },
  });
}

void deleteThread() async {
  await openAI.threads.deleteThread(threadId: 'threadId');

}

openAI.threads.v2;

void createMessage() async {
  final request = CreateMessage(
    role: 'user',
    content: 'How does AI work? Explain it in simple terms.',
  );

  await openAI.threads.messages.createMessage(
    threadId: 'threadId',
    request: request,
  );
}

void listMessage() async {
  final mMessages =
      await openAI.threads.messages.listMessage(threadId: 'threadId');
}

void listMessageFile() async {
  final mMessagesFile = await openAI.threads.messages.listMessageFile(
    threadId: 'threadId',
    messageId: '',
  );
}

void retrieveMessage() async {
  final mMessage = await openAI.threads.messages.retrieveMessage(
    threadId: 'threadId',
    messageId: '',
  );
}

void retrieveMessageFile() async {
  final mMessageFile = await openAI.threads.messages.retrieveMessageFile(
    threadId: 'threadId',
    messageId: '',
    fileId: '',
  );
}

void modifyMessage() async {
  await openAI.threads.messages.modifyMessage(
    threadId: 'threadId',
    messageId: 'messageId',
    metadata: {
      "metadata": {"modified": "true", "user": "abc123"},
    },
  );

}

openAI.threads.v2.messages;

void createRun() async {
  final request = CreateRun(assistantId: 'assistantId');
  await openAI.threads.runs.createRun(threadId: 'threadId', request: request);
}

void createThreadAndRun() async {
  final request = CreateThreadAndRun(assistantId: 'assistantId', thread: {
    "messages": [
      {"role": "user", "content": "Explain deep learning to a 5 year old."}
    ],
  });

  await openAI.threads.runs.createThreadAndRun(request: request);
}

void listRuns() async {
  final mRuns = await openAI.threads.runs.listRuns(threadId: 'threadId');
}

void listRunSteps() async {
  final mRunSteps = await openAI.threads.runs
      .listRunSteps(threadId: 'threadId', runId: '');
}

void retrieveRun() async {
  final mRun = await openAI.threads.runs
      .retrieveRun(threadId: 'threadId', runId: '');
}

void retrieveRunStep() async {
  final mRun = await openAI.threads.runs
      .retrieveRunStep(threadId: 'threadId', runId: '', stepId: '');
}

void modifyRun() async {
  await openAI.threads.runs.modifyRun(
    threadId: 'threadId',
    runId: '',
    metadata: {
      "metadata": {"user_id": "user_abc123"},
    },
  );

}

void submitToolOutputsToRun() async {
  await openAI.threads.runs.submitToolOutputsToRun(
    threadId: 'threadId',
    runId: '',
    toolOutputs: [
      {
        "tool_call_id": "call_abc123",
        "output": "28C",
      },
    ],
  );
}

void cancelRun() async {
  await openAI.threads.runs.cancelRun(
    threadId: 'threadId',
    runId: '',
  );

}

///using catchError
openAI.onCompletion(request: request).catchError((err) {
  if (err is OpenAIAuthError) {
    print('OpenAIAuthError error ${err.data?.error?.toMap()}');
  }
  if (err is OpenAIRateLimitError) {
    print('OpenAIRateLimitError error ${err.data?.error?.toMap()}');
  }
  if (err is OpenAIServerError) {
    print('OpenAIServerError error ${err.data?.error?.toMap()}');
  }
});

///using try catch
try {
  await openAI.onCompletion(request: request);
} on OpenAIRateLimitError catch (err) {
  print('catch error ->${err.data?.error?.toMap()}');
}
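The try/catch pattern above can be tried without network access. The following is a minimal sketch in plain Dart, not the SDK's own code: `FakeAuthError`, `FakeRateLimitError`, and `safeCall` are hypothetical names introduced only for illustration, standing in for the SDK's error classes and for a call such as `openAI.onCompletion`. It shows how typed `on` clauses route each error to its own handler, with a final `catch` for anything unexpected.

```dart
// Hypothetical stand-ins for the SDK's error types (assumption: the real
// classes carry richer data; only a message is modelled here).
class FakeAuthError implements Exception {
  final String message;
  FakeAuthError(this.message);
}

class FakeRateLimitError implements Exception {
  final String message;
  FakeRateLimitError(this.message);
}

// Runs [call] and turns each known error type into a readable string.
Future<String> safeCall(Future<String> Function() call) async {
  try {
    return await call();
  } on FakeAuthError catch (err) {
    return 'auth error: ${err.message}';
  } on FakeRateLimitError catch (err) {
    return 'rate limit error: ${err.message}';
  } catch (err) {
    // Anything that is not one of the typed errors above.
    return 'unexpected error: $err';
  }
}

Future<void> main() async {
  // Successful call: the result is passed through unchanged.
  print(await safeCall(() async => 'ok'));

  // Failing call: the matching `on` clause handles the error.
  print(await safeCall(
      () async => throw FakeRateLimitError('too many requests')));
}
```

Ordering the `on` clauses from most specific to least specific keeps the generic `catch` as a fallback only.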

///with stream

openAI

. onCompletionSSE (request : request)

. transform ( StreamTransformer . fromHandlers (

handleError : (error, stackTrace, sink) {

if (error is OpenAIRateLimitError ) {

print ( 'OpenAIRateLimitError error ->${ error . data ?. message }' );

}}))

. listen ((event) {

print ( "success" );

}); final request = CompleteText (prompt : 'What is human life expectancy in the United States?' ),

model : TextDavinci3Model (), maxTokens : 200 );

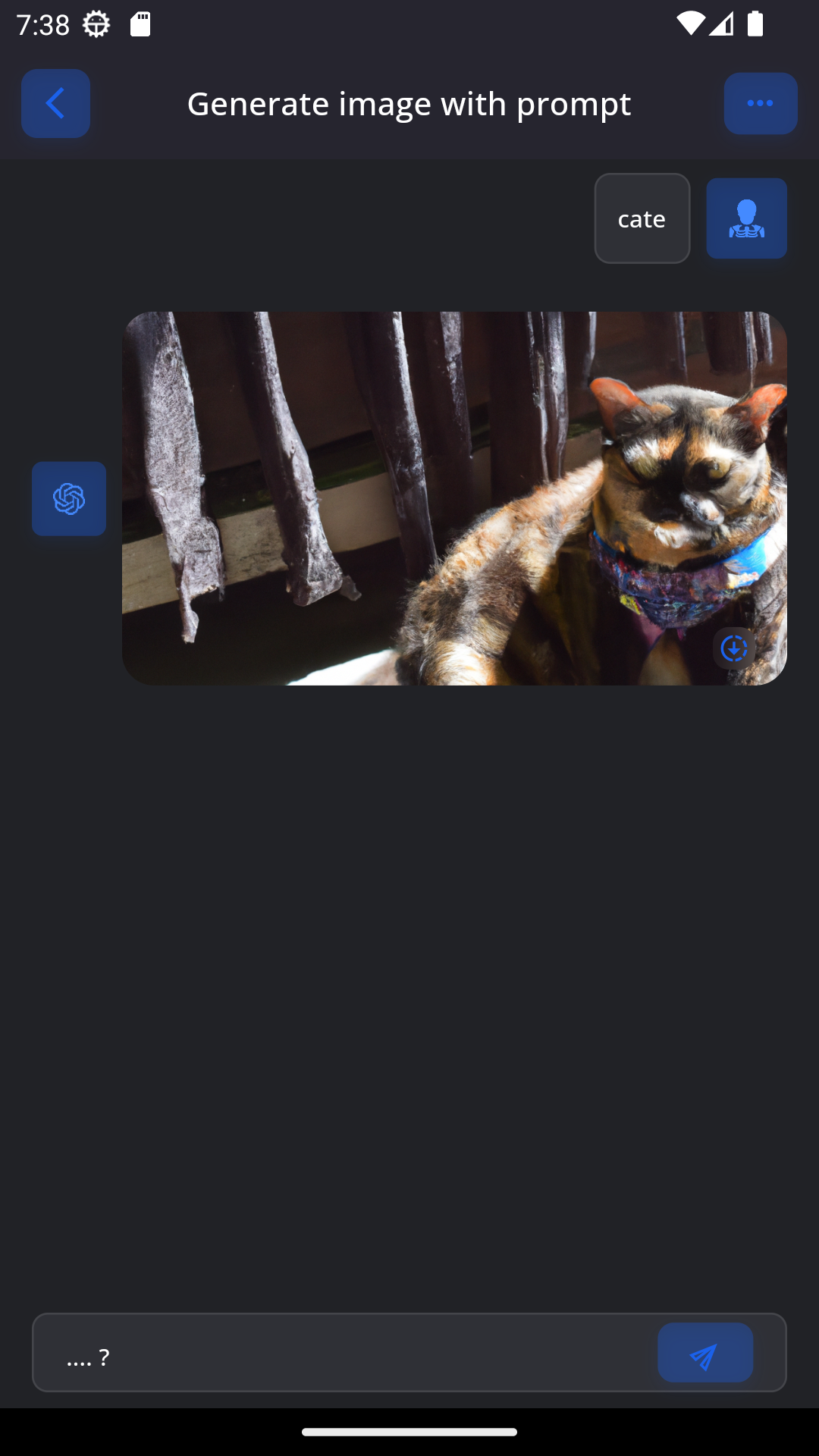

final response = await openAI. onCompletion (request : request); Q : What is human life expectancy in the United States ? A : Human life expectancy in the United States is 78 years.Bild erzeugen

void _generateImage() async {
  const prompt = "cat eating snake blue red.";

  // Positional arguments first, then named ones.
  final request = GenerateImage(prompt, 1,
      model: DallE2(), size: ImageSize.size256, responseFormat: Format.url);

  // Await the call so the response, not the Future, is read below.
  final response = await openAI.generateImage(request);
  print("img url :${response?.data?.last?.url}");

}

void editPrompt() async {
  final response = await openAI.editor.prompt(EditRequest(
      model: CodeEditModel(),
      input: 'What day of the wek is it?',
      instruction: 'Fix the spelling mistakes'));

  print(response.choices.last.text);
}

void editImage() async {
  final response = await openAI.editor.editImage(EditImageRequest(
      image: FileInfo("${image?.path}", '${image?.name}'),
      mask: FileInfo('file path', 'file name'),
      size: ImageSize.size1024,
      prompt: 'King Snake',
      model: DallE3()));

  print(response.data?.last?.url);
}

void variation() async {
  final request = Variation(
      model: DallE2(), image: FileInfo('${image?.path}', '${image?.name}'));
  final response = await openAI.editor.variation(request);

  print(response.data?.last?.url);

}

_openAI.onChatCompletionSSE(request: request, onCancel: onCancel);

///CancelData
CancelData? mCancel;
void onCancel(CancelData cancelData) {
  mCancel = cancelData;
}

mCancel?.cancelToken.cancel("canceled ");

openAI.editor.editImage(request, onCancel: onCancel);

///CancelData
CancelData? mCancel;
void onCancel(CancelData cancelData) {
  mCancel = cancelData;
}

mCancel?.cancelToken.cancel("canceled edit image");

openAI.embed.embedding(request, onCancel: onCancel);

///CancelData
CancelData? mCancel;
void onCancel(CancelData cancelData) {
  mCancel = cancelData;
}

mCancel?.cancelToken.cancel("canceled embedding");

openAI.audio.transcribes(request, onCancel: onCancel);

///CancelData
CancelData? mCancel;
void onCancel(CancelData cancelData) {
  mCancel = cancelData;
}

mCancel?.cancelToken.cancel("canceled audio transcribes");

openAI.file.uploadFile(request, onCancel: onCancel);

///CancelData
CancelData? mCancel;
void onCancel(CancelData cancelData) {
  mCancel = cancelData;
}

mCancel?.cancelToken.cancel("canceled uploadFile");

void getFile() async {

  final response = await openAI.file.get();
  print(response.data);
}

void uploadFile() async {
  final request = UploadFile(
      file: FileInfo('file-path', 'file-name'), purpose: 'fine-tune');
  final response = await openAI.file.uploadFile(request);
  print(response);
}

void delete() async {
  final response = await openAI.file.delete("file-Id");
  print(response);
}

void retrieve() async {
  final response = await openAI.file.retrieve("file-Id");
  print(response);
}

void retrieveContent() async {
  final response = await openAI.file.retrieveContent("file-Id");
  print(response);

}

void audioTranslate() async {
  final mAudio = File('mp3-path');
  final request =
      AudioRequest(file: FileInfo(mAudio.path, 'name'), prompt: '...');

  final response = await openAI.audio.translate(request);
}

void audioTranscribe() async {
  final mAudio = File('mp3-path');
  final request =
      AudioRequest(file: FileInfo(mAudio.path, 'name'), prompt: '...');

  final response = await openAI.audio.transcribes(request);
}

void createSpeech() async {
  final request = SpeechRequest(
      model: 'tts-1', input: 'The quick brown fox jumped over the lazy dog.');

  final List<int> response =
      await openAI.audio.createSpeech(request: request);
}

void embedding() async {
  final request = EmbedRequest(
      model: TextSearchAdaDoc001EmbedModel(),
      input: 'The food was delicious and the waiter');

  final response = await openAI.embed.embedding(request);
  print(response.data.last.embedding);

}

void createFineTune() async {
  final request = CreateFineTuneJob(trainingFile: 'The ID of an uploaded file');
  final response = await openAI.fineTune.createFineTuneJob(request);
}

void fineTuneList() async {
  final response = await openAI.fineTune.listFineTuneJob();
}

void fineTuneListStream() {
  openAI.fineTune.listFineTuneJobStream('fineTuneId').listen((it) {
    ///handled data
  });
}

void fineTuneById() async {
  final response = await openAI.fineTune.retrieveFineTuneJob('fineTuneId');
}

void fineTuneCancel() async {
  final response = await openAI.fineTune.cancel('fineTuneId');
}

void deleteFineTune() async {
  final response = await openAI.fineTune.delete('model');
}

void createModeration() async {
  final response = await openAI.moderation
      .create(input: 'input', model: TextLastModerationModel());
}

final models = await openAI.listModel();

final engines = await openAI.listEngine();

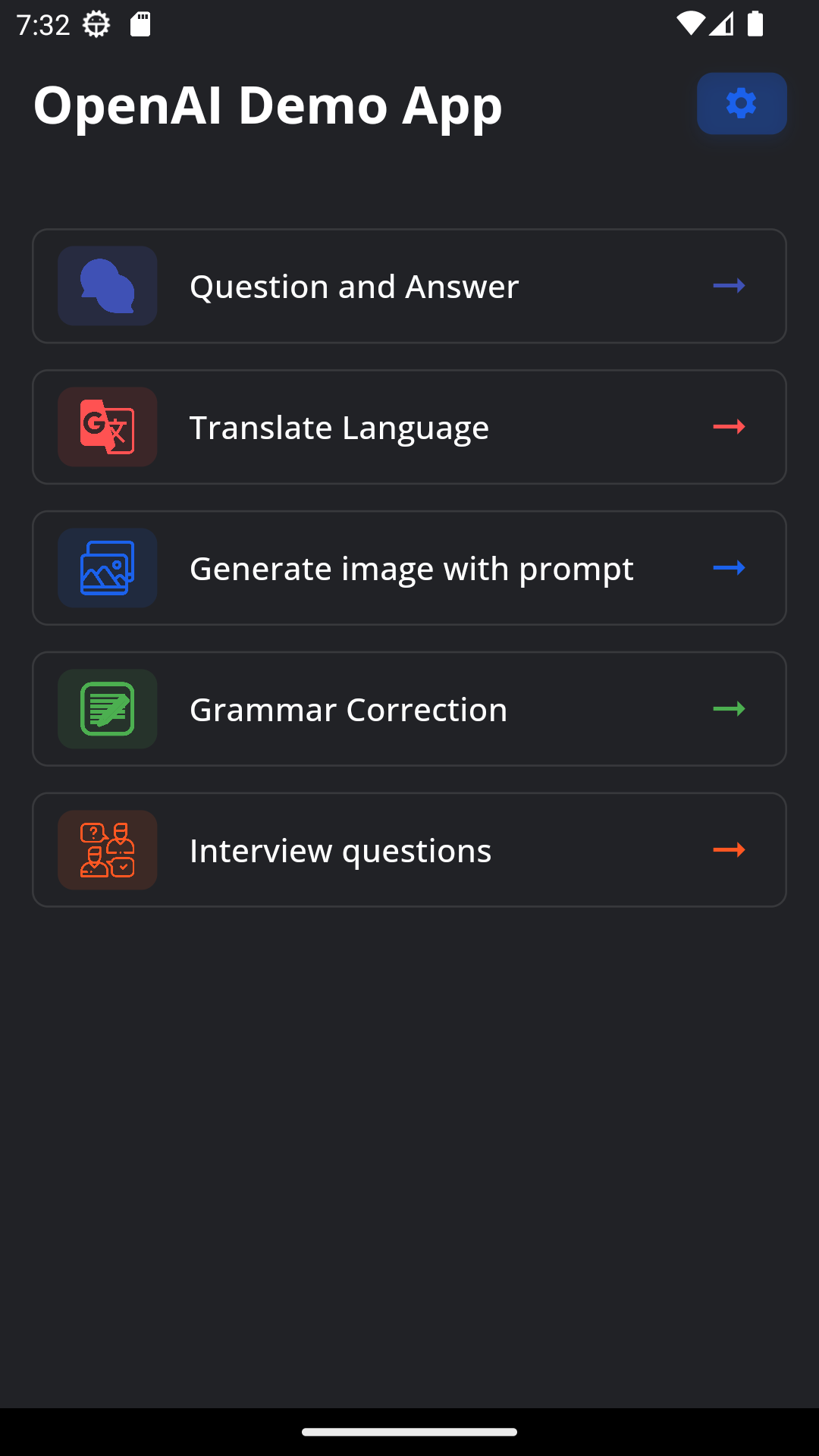

Flutter Chat Bot

Flutter Generate Image